At Wayfair, we recommend millions of products across all styles and budgets. Because of the scale of our catalog, we constantly ask ourselves: how can we help our customers find the right products efficiently while discovering exciting complements to fill out their space?

Introduction

In this article, we want to shed some light on a robust model called Hedwig used as a standalone recommendation system in multiple places where customers interact with Wayfair. Our Hedwig recommendation model helps customers throughout their entire journey, from email recommendations to suggestions on the home page, retargeting ads, and even push notifications.

Background

The Hedwig model supersedes a legacy model trained on customer co-clicks to find related products using the Jaccard Similarity Index. Hedwig offers three crucial advantages compared to our legacy approach.

- First, the model is scalable. The legacy model relied on pairwise calculations, which introduced severe computational costs as our catalog continues to grow.

- Second, relying solely on co-clicks data can lead to problems, including issues with click-bait and popularity bias. Previously recommended Items were privileged to be shown again in future recommendations. Hedwig addresses these issues by incorporating higher intent customer-SKU interactions to bridge the gap between clicks and user satisfaction.

- Third, instead of serving average recommendations across all use cases, Hedwig provides unique recommendations for different use cases.

In short, Hedwig as a recommendation model offers scalability and flexibility.

Model Overview

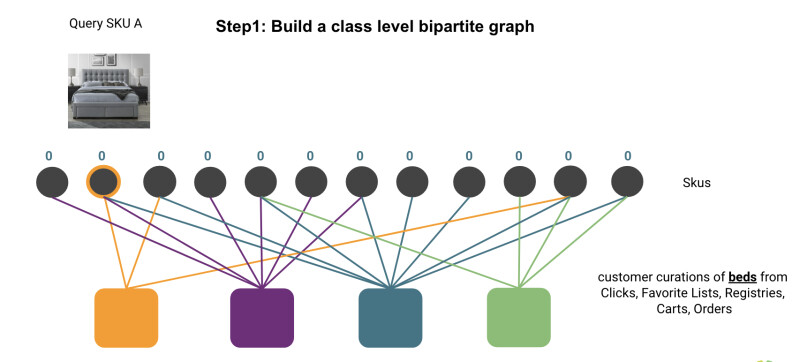

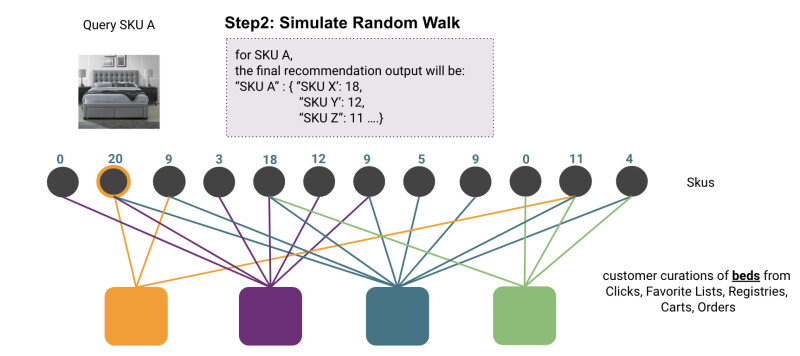

Hedwig is a recommendation model for fetching related furniture and home goods, given an example item. The Pixie Random Walk algorithm inspired the creation of our model. We create a bipartite graph by incorporating data on how customers interact with products to power the model. The two entities in the bipartite graph are SKUs and customer curations. A customer curation is essentially a set of SKUs curated by our customers, including session-level clicks, favorite lists, registries, carts, and orders. We simulate random walks on the graph to build the desired recommendations.

The Intuition behind the Random Walk Algorithm with Restart

The critical step in our algorithm is the graph traversal. By traversing the graph, we assume that customers who add the same SKUs to their curations are likely to share similar traits and style tastes. Products curated by the same person also tend to be similar. The algorithm mimics the recursive nature of the problem itself.

More concretely, starting from the SKU we want to make recommendations for, we first take a random step forward from the SKU to any customer curation linked together with an edge and then a backward step to any SKUs connected to the customer curation. We repeat the same process multiple times. In this fashion, the model learns the SKUs that are most closely associated with the query SKU. Typically, we restrict our attention to only the top-K most frequently visited SKUs, and they become the model’s recommendations for the query SKU.

We treat the number of steps to take before restarting the process as a tunable hyperparameter. We observe that one to three steps are sufficient. Intuitively, this is because each successive step reduces the similarity between SKUs. Note that slightly larger step sizes may be used to facilitate SKU discovery. Therefore, if you value helping customers discover novel SKUs over highly related products, you can set the number slightly larger.

Adaptation 1: Leverage Both Long Term and Short Term Signals

Building on the backbone of the Pixie model, we make several adaptations for Wayfair. The first change is on the training data source. Hedwig leverages both short-term and long-term customer shopping behavior. For short-term signals, we blend in customer curations at different purchase stages into our training data. Our first-tier candidates are lower funnel customer curations such as idea boards, registries, carts, and orders, which are sets of SKUs that our customers carefully select. To boost the model’s SKU coverage, especially for Wayfair’s smaller brands, we also incorporate customer session-level clicks as a second-tier training data source.

In the meantime, we found that customers who shop for furniture at higher price points often require longer consideration times (multiple sessions) before they finally make a purchase. For this reason, we link SKUs across the customer journey leading up to purchase to better capture the customer’s consideration cycle over a relatively long-term period.

Adaptation 2: Biased Random Walk to Mitigate Popularity Bias and Click Bait

In our first model iteration, we sampled SKUs uniformly from a customer curation during the random walk. While overall v1 model performance significantly outperformed our legacy approach, we observed some degree of popularity bias and clickbait SKUs surfacing in our Hedwig recommendations, undermining the user experience.

We proposed to solve this problem by changing our sampling logic. Instead of sampling every SKU with equal weight, we weighted based on the product of the TFIDF and post-click ATC rate of each SKU (the likelihood a customer would add-to-cart a product after they click on the product detail page). For the TFIDF calculation, we calculate the TF using the SKU clicks in each customer’s curations individually and the IDF using the overall SKU frequency across all customer curations.

This new approach to weighing SKU edges resulted in a lower likelihood of sampling from clickbait as the post-click ATC term emphasizes the lower funnel SKU performance. At the same time, it increases the model robustness for generating truly relevant recommendations for our customers. The result is that while popular SKUs are still dominant in our curations graph, we reduce the likelihood of sampling these popular products unless they have received significant attention (clicks) from a given customer.

Adaptation 3: Distinguishing Substitute and Complementary products

It is essential to understand how products relate to each other with different customer intents in a modern recommender system. For example, while a user is searching for a sofa, it may make sense to recommend similar-looking sofas, futons, or even sectionals. But once they add to cart or purchase a sofa, we may instead want to suggest complementary items such as ottomans and coffee tables. These two types of recommendations are referred to as substitutes and complements, respectively.

At Wayfair, each product is labeled with a class, ranging from a sofa, table lamp, refrigerator, gazebo, and many more. There are both in-class substitutes and cross-class substitutes, but complements are always cross-class.

The implementation of the in-class substitute Hedwig model is relatively straightforward. Rather than creating a large graph containing the entire catalog and biasing the random walk towards same class products, we build small bipartite graphs on the class level. This enables us to parallelize model training drastically. As for cross-class substitutes, we won’t expand on the topic in this post, but you can check out a previous blog post for more information on how we group together substitute classes.

The problem of complementary recommendations is slightly more complex since we need to distinguish cross-class substitutes from cross-class complements. We derive anchor class and complementary class relationships from historical order data. We first calculate a Term Frequency (TF) score to represent how often two classes are co-ordered. Then, we penalize popular SKUs by applying an inverse document frequency (IDF) term to penalize dominating complementary classes.

We use model hyperparameters to set thresholds on the minimum value of co-orders, TF, and IDF for a complementary class to be considered. We have observed that without the IDF term, the most co-purchased classes of any anchor class will have a high chance of being dominated by our best-selling categories at Wayfair, such as area rugs and bedding. We also found that applying the IDF term can help us more accurately identify complementary classes. The TFIDF score determines the final ordering of the complementary classes. The higher the TFIDF score, the stronger the complementary relationship between the two classes.

After we identify complementary class pairs, we train the model at the class-pair level. In other words, we build a graph for each complementary class pair. Training models at the complementary class pair level has the additional advantage of speeding up the training process via parallelism. Additionally, this approach prevents long-tailed classes from being underrepresented in a larger, unified graph.

Adaptation 4: Overcome the Cold Start Problem

A cold start happens when new products arrive on e-commerce platforms. It’s a known problem for any collaborative filtering model since this type of model relies on the item's interactions to make recommendations. At first, we considered using a content-based model as a direct fallback strategy for Hedwig to solve the problem. However, we found that content-based models are likely to recommend SKUs with fewer reviews, contrary to customer preferences.

We leverage our in-house content-based product similarity embeddings to address this problem, built using imagery, product descriptions, and other product features. These embeddings help us find substitutes for SKUs with low or no visibility in the Hedwig graph. We will first retrieve the top 10 nearest neighbors in the product similarity embedding space for these low visibility SKUs. We then examine if any of the ten neighbors are already in our bipartite graph. Once we find a neighbor in the graph, we’ll insert this low visibility SKU into the same customer curation as its neighbor. We only allow these low or no visibility SKUs to be inserted into a maximum of two customer curations. We found that if we insert a new SKU too aggressively, we disrupt the original graph structure, which leads to worse performance in our offline tests.

We Drive Results

Hedwig’s flexibility and scalability have proven themselves at Wayfair. To date, three versions of the Hedwig model have been productionized. These include an in-class substitutes model, a cross-class substitutes model, and a cross-class complementary model. The latest iteration of the Hedwig model also incorporates product similarity embeddings to further improve coverage for SKUs without customer interactions, improving the implicit feedback weights in the graph and expanding out the training data to capture relatively long-term signals. Our recent online A/B tests have shown that the new Hedwig models drive better customer engagement and bring more incremental value across a variety of placements on all Wayfair stores!

We are Never Done

Today, Hedwig is still more widely used as a stand-alone recommendation model for different touchpoints. The next item on our checklist is to integrate it with our existing single-class ranking model and start building a personalized cross-class ranking model to continue to improve on the user experience. In the meantime, we’re looking to scale the granularity of our recommendations to specific product options rather than top-level products. We hope that these more detailed recommendations will delight our customers.