Introduction

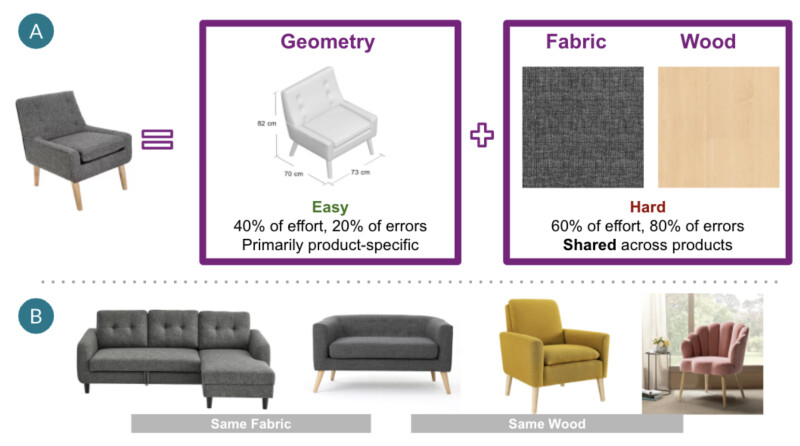

3D models are composed of two parts: geometry and materials. For example, the geometry in Figure 1A captures the detailed structure of the chair. Once the geometry is created, artists make the corresponding 3D materials (e.g. upholstery and wooden legs) and apply them to the geometry to create a 3D model of the chair. Creating 3D materials is time-consuming and prone to human error because materials carry rich features such as texture and gloss. However, unlike geometries, the same materials are usually shared across products (Figure 1B). If we can reuse the same 3D material, the cost and time of 3D material creation will be significantly reduced. Our mission on the Data Science-3DOps team is to unlock material matching across the catalog to allow material reuse and drive efficiency in 3D material creation.

There are three key challenges in solving material matching problems.

- High standards for matching: Our in-house artists evaluate material similarity from a 3D material creation perspective. Matches need to capture many material-related features including texture, color, gloss, bumps, etc. These multidimensional requirements make it a challenging problem to tackle.

- Minimal or no training data: Only in a few cases can we obtain ground truth on whether two products share the same material. Across suppliers in particular, ground-truth material matches are very hard to obtain.

- Generalization to never-before-seen materials: While we have more than 22 million products in the catalog, new products are still being added to our site daily. Therefore, the final model needs to generalize well to materials that it has never seen before.

In this blog post, we cover two types of materials that are very common in 3D modeling: upholstery and metal. Our approaches to finding similar upholstery and metal materials differ because of the nature of each material and the data available.

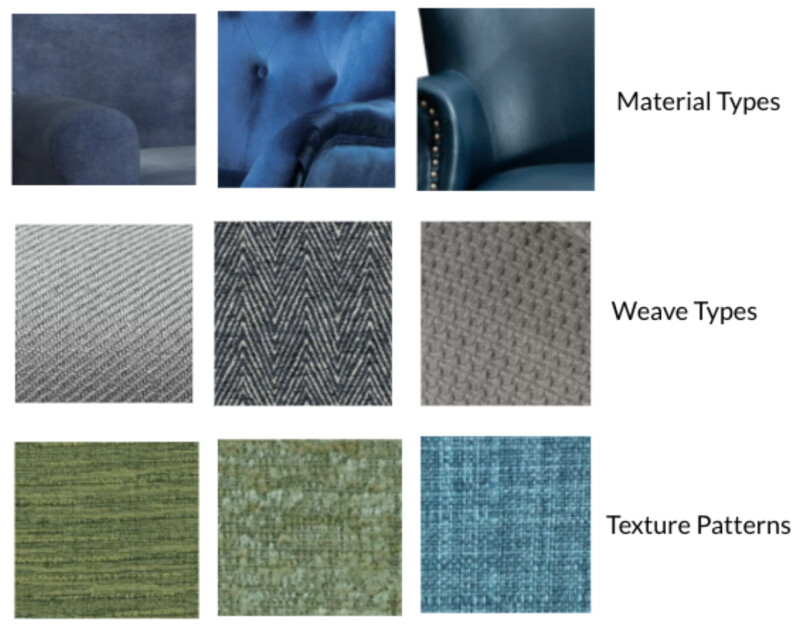

- Upholstery materials have diverse attributes such as weave type, texture pattern, and shine that are usually intertwined to form a unique appearance (Figure 2). Texture patterns alone have so many variations that building an upholstery pattern taxonomy is nearly impossible, which makes it difficult to build a classification model to tag similar materials. Therefore, we cast the problem as metric learning and learn material similarity from images. Metric learning is a supervised task of learning a distance function that measures how similar or related two objects are, and it has been widely used in industry for tasks such as face recognition [1, 2]. Given examples of similar and dissimilar pairs, a machine learning model is trained to generate a latent representation (embedding) for each input, and the distance between two embeddings measures material similarity. Similar materials should be close to each other in the embedding space, while different materials should be far apart. These embeddings can help identify upholstery matches given images of any two materials.

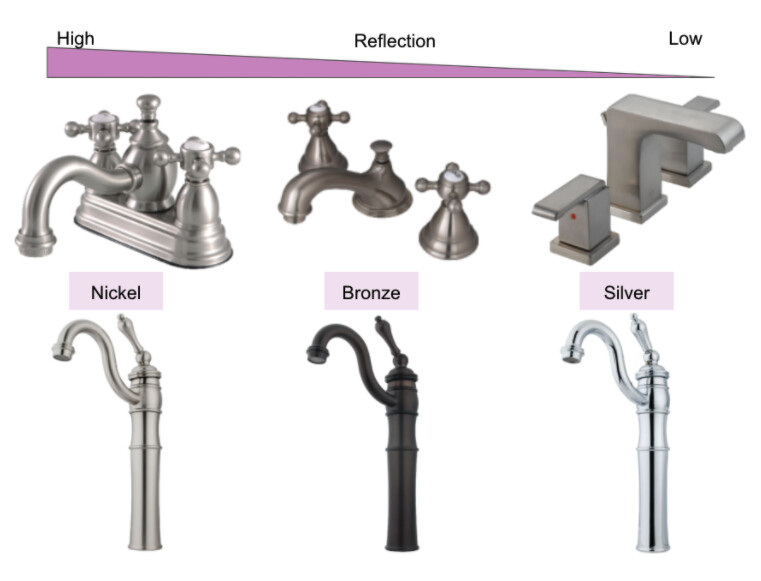

- Compared with upholstery, metal materials have relatively few features that determine reusability. In particular, our in-house 3D artists have identified several important features, e.g. gloss, reflection, finish, and color. Figure 3 shows a few products with different reflections and colors. To find matches for metal materials, we first developed models to extract these features from product images and then cast matching as an unsupervised clustering problem.

In the following sections, we will dive into how we find matches in these two material types, including available data sources, model details, sample results, and future directions.

Matching Upholstery Materials

Ground truth upholstery match dataset

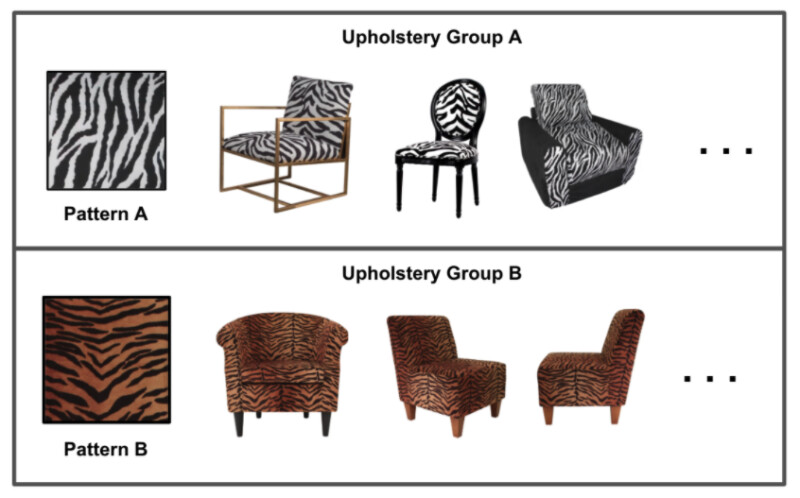

Metric learning requires similar and dissimilar pairs to train models. We leveraged a material swatch dataset that groups products with the same upholstery material. As you can see in Figure 4, all products in Upholstery group A share the same upholstery material, including all attributes such as color, pattern, and material type (fabric). We can generate similar pairs by picking products from the same group, and products drawn from different groups can be treated as dissimilar pairs. In addition to these positive and negative pairs, we also need to know where the material is located in each product image. Here, we leveraged a set of human-drawn bounding boxes that isolate the materials, and we feed these material crops as input to our models.
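The pair construction described above can be sketched in a few lines of Python. This is only a minimal illustration, not our production code: the function name `build_pairs`, the `groups` dictionary layout, the product IDs, and the random sampling of negatives are all assumptions made for the example, and it assumes each product belongs to exactly one swatch group.

```python
import itertools
import random

def build_pairs(groups, n_negative, seed=0):
    """Return (similar, dissimilar) lists of product-ID pairs.

    groups: dict mapping a swatch group ID to the product IDs in that group
            (hypothetical layout; each product is assumed to be in one group).
    """
    rng = random.Random(seed)

    # Similar pairs: every unordered pair of products inside one group.
    similar = [
        pair
        for products in groups.values()
        for pair in itertools.combinations(sorted(products), 2)
    ]

    # Dissimilar pairs: one product from each of two different groups.
    group_ids = sorted(g for g in groups if groups[g])
    max_negative = sum(
        len(groups[a]) * len(groups[b])
        for a, b in itertools.combinations(group_ids, 2)
    )
    target = min(n_negative, max_negative)
    dissimilar = set()
    while len(dissimilar) < target:
        g1, g2 = rng.sample(group_ids, 2)  # two distinct groups
        p1 = rng.choice(sorted(groups[g1]))
        p2 = rng.choice(sorted(groups[g2]))
        dissimilar.add(tuple(sorted((p1, p2))))
    return similar, sorted(dissimilar)

# Made-up product IDs for illustration only.
example_groups = {
    "A": ["sofa-1", "chair-2", "ottoman-3"],
    "B": ["sofa-4", "chair-5"],
}
similar_pairs, dissimilar_pairs = build_pairs(example_groups, n_negative=4)
```

Because there are far more cross-group combinations than within-group ones, the sketch samples a fixed number of dissimilar pairs rather than enumerating all of them.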

Building a material matching model

We first built a baseline material matching model using a pre-trained deep learning model, ResNet-50, which is a very deep neural network with top performance in object classification [3]. The second-to-last layer of ResNet-50 holds the rich features the network has extracted and serves as input to the final classification layer, so we used its output directly as our embeddings to represent materials. Our baseline method identified nearest neighbors in the embedding space as matches and achieved 13% matching accuracy (for comparison, the matching rate for a random pair within the same material type, such as Leather, is 0.2%). However, as the identified matches show, ResNet-50 focused heavily on edges and high-level features rather than material-specific features (Figure 5). Additional training on the ground truth upholstery match dataset is therefore needed to learn material similarity.
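To make the nearest-neighbor evaluation concrete, here is a minimal NumPy sketch. It assumes that the embeddings have already been extracted, that cosine similarity is the comparison measure, and that a match counts as correct when a product's nearest neighbor belongs to the same material group. These are illustrative assumptions, as are the function name and the toy data; our actual metric definition may differ.

```python
import numpy as np

def nearest_neighbor_accuracy(embeddings, group_ids):
    """Fraction of items whose nearest other item shares their material group."""
    x = np.asarray(embeddings, dtype=float)
    labels = np.asarray(group_ids)
    # L2-normalise rows so that the dot product equals cosine similarity.
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    sim = x @ x.T
    np.fill_diagonal(sim, -np.inf)  # an item may not match itself
    nearest = sim.argmax(axis=1)
    return float(np.mean(labels[nearest] == labels))

# Toy 2-D "embeddings"; real embeddings have many more dimensions.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9], [0.8, 0.6]]
toy_groups = ["a", "a", "b", "b", "b"]
accuracy = nearest_neighbor_accuracy(toy_embeddings, toy_groups)
```

In the toy data the last item sits closer to the "a" products than to its own group, so it is counted as a miss.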

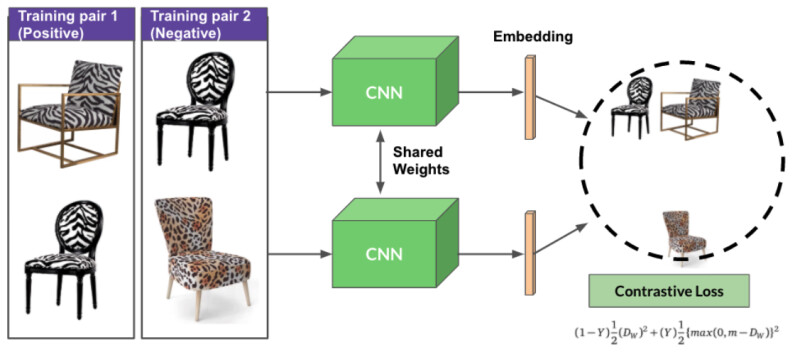

To steer the model toward material-specific features, we chose a Siamese network with convolutional neural networks (CNNs) as shared blocks to learn material similarity. Siamese networks can learn to distinguish patterns and have been widely used for tasks such as signature verification and face recognition [4]. As shown in Figure 6, our Siamese model takes pairs of products as input. The upholstery material crop (bounding box) of each product in the pair passes through the same CNN block to generate an embedding that represents that material.

For our upholstery matching model, we performed transfer learning on the baseline ResNet-50, which serves as our CNN block. We used contrastive loss as the objective function, which pulls similar textures closer together and pushes different textures farther apart in the metric space. For example, when a similar pair passes through the model during training, the Siamese model adjusts its parameters to decrease the distance between the two embeddings (Figure 6, pair 1). When a dissimilar pair passes through, the distance is increased (Figure 6, pair 2). Through training on numerous similar and dissimilar pairs, the parameters of the Siamese model are optimized to produce a material embedding space. We then ran approximate nearest neighbor search to find the nearest neighbor matches in the embedding space [5].
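For readers who have not met contrastive loss before, the sketch below shows one common margin-based formulation in NumPy. It is an illustration under assumptions rather than our training code: the Euclidean distance, the squared penalty terms, and the `margin` value of 1.0 are all choices made for the example.

```python
import numpy as np

def contrastive_loss(emb_a, emb_b, is_similar, margin=1.0):
    """Mean margin-based contrastive loss over a batch of embedding pairs.

    is_similar holds 1 for a similar pair and 0 for a dissimilar pair.
    """
    a = np.asarray(emb_a, dtype=float)
    b = np.asarray(emb_b, dtype=float)
    y = np.asarray(is_similar, dtype=float)
    dist = np.linalg.norm(a - b, axis=1)  # Euclidean distance per pair
    # Similar pairs are penalised for being far apart.
    similar_term = y * dist**2
    # Dissimilar pairs are penalised only while closer than the margin.
    dissimilar_term = (1.0 - y) * np.maximum(0.0, margin - dist) ** 2
    return float(np.mean(similar_term + dissimilar_term))

# Three toy pairs: similar at distance 0.5, dissimilar at 0.5, dissimilar at 5.
left = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
right = [[0.3, 0.4], [0.3, 0.4], [3.0, 4.0]]
loss = contrastive_loss(left, right, is_similar=[1, 0, 0])
```

The third pair contributes nothing to the loss because it is already farther apart than the margin, so training effort concentrates on pairs the model still gets wrong.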

Evaluating model performance

We optimized the upholstery matching model through hyperparameter tuning and achieved 45% matching accuracy, roughly 3.5 times the 13% accuracy of our baseline model. This improved matching accuracy can significantly contribute to material reuse by artists.

With the optimized upholstery matching model, we generated embeddings for products in the catalog and ran approximate nearest neighbor search to find matches within the catalog. Figure 7 shows some sample matches from our baseline and upholstery matching models among products in the catalog. Compared to the baseline model, the upholstery matching model produces high-quality color and texture matches and does not fixate on high-level features. These matching products can be provided to artists so that they can create a general, reusable 3D material. Our model was deployed in production and now refreshes matching results on a weekly cadence to cover new SKUs added to our site. We continue working with artists to collect more training data and further improve model performance.

Matching Metal Materials

Unlike upholstery, metal materials do not have labeled data indicating whether two materials are a match. Further, compared to upholstery, relatively few features determine reusability, e.g. gloss, reflection, finish, and color. As a result, we took a different approach to identifying material matches between metals.

Unsupervised Methods for Metal Similarity

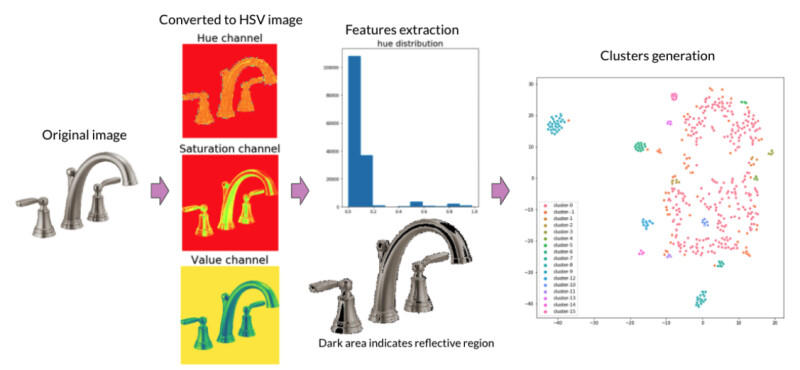

Our metal cluster generation pipeline for all-metal products (still under development) consists of two parts, feature extraction and cluster generation, as shown in Figure 8. The first step in the pipeline is to convert silo images from RGB channels to HSV channels. HSV stands for Hue, Saturation, and Value; it is designed to align more closely with human perception of color, and so it better mimics how humans recognize similar materials. To extract color features, we calculated Hue channel (H) distributions and RGB mean values. To detect reflection, we identified areas with high values (>0.8) in the Value channel (V) and low values (<0.2) in the Saturation channel (S) as reflective areas. We defined the reflection ratio as the percentage of the whole product image covered by reflective areas [6]. With the color and reflection features extracted, we used DBSCAN to generate clusters from a pairwise hue distance matrix (computed with the Kolmogorov–Smirnov test statistic) and then split those clusters further using reflection ratios and RGB mean values. For new products, we calculate feature values and assign each product to the nearest cluster. If the distances between a new product and the current clusters are too large, we treat the product as a potential new metal material.
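The feature extraction step can be illustrated with a short NumPy sketch. It assumes RGB images stored as float arrays scaled to [0, 1] and hue samples that have already been computed per product; the function names are hypothetical. The thresholds are the ones quoted above. The sketch does not mask out the image background, which a real pipeline would need to handle, because a white background also has high value and low saturation.

```python
import numpy as np

def saturation_and_value(rgb):
    """HSV saturation and value for an RGB image with floats in [0, 1]."""
    rgb = np.asarray(rgb, dtype=float)
    value = rgb.max(axis=-1)
    chroma = value - rgb.min(axis=-1)
    # Saturation is chroma / value, defined as 0 for black pixels.
    saturation = np.divide(chroma, value, out=np.zeros_like(value), where=value > 0)
    return saturation, value

def reflection_ratio(rgb, v_min=0.8, s_max=0.2):
    """Share of pixels that are bright (V > v_min) and washed out (S < s_max)."""
    saturation, value = saturation_and_value(rgb)
    return float(np.mean((value > v_min) & (saturation < s_max)))

def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the ECDFs."""
    a = np.sort(np.asarray(sample_a, dtype=float).ravel())
    b = np.sort(np.asarray(sample_b, dtype=float).ravel())
    grid = np.concatenate([a, b])
    cdf_a = np.searchsorted(a, grid, side="right") / a.size
    cdf_b = np.searchsorted(b, grid, side="right") / b.size
    return float(np.max(np.abs(cdf_a - cdf_b)))

def hue_distance_matrix(hue_samples):
    """Symmetric matrix of pairwise KS distances between hue samples."""
    n = len(hue_samples)
    dist = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            dist[i, j] = dist[j, i] = ks_statistic(hue_samples[i], hue_samples[j])
    return dist

# A 2x2 toy image: two bright, low-saturation pixels out of four.
toy_image = [[[1.0, 1.0, 1.0], [0.9, 0.85, 0.8]],
             [[0.8, 0.1, 0.1], [0.2, 0.2, 0.2]]]
ratio = reflection_ratio(toy_image)
distances = hue_distance_matrix([[0.1, 0.2, 0.3, 0.4], [0.3, 0.4, 0.5, 0.6]])
```

A matrix like `distances` could then be handed to any DBSCAN implementation that accepts precomputed distances, for example scikit-learn's `DBSCAN` with `metric="precomputed"`.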

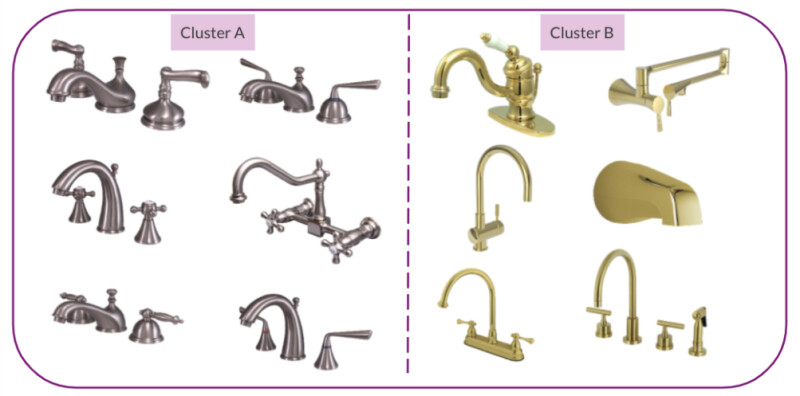

Figure 9 shows some sample clusters generated from our model. Cluster A contains steel products with high reflection and cluster B contains golden products with medium reflection. With these clusters, 3D artists can create new metal materials by slightly adjusting existing similar materials within the same cluster rather than starting from scratch, which can decrease 3D metal material creation time.

The current model covers only all-metal products. We are working on collecting high-quality image crops that capture the metal areas of products that combine metal with other materials. Further, we are working with artists to evaluate the metal material clusters and improve our models.

Future Work

In the future, we will continue improving our material matching pipeline, especially with regard to data quality and volume. Curating high-quality training data that represents the various properties of different materials will help ML models learn material similarity. In particular, it is challenging to obtain metal image crops that represent metal features such as reflection and gloss. We are also interested in designing algorithms that segment materials automatically from product images, and in further improving model performance by selecting hard negative product pairs and using triplet loss in model training. Finally, real-time deployment in production, for example as a service, would let artists consume model matches while they create 3D models.

References

[1]. J. L. Suárez-Díaz, S. García, and F. Herrera, “A Tutorial on Distance Metric Learning”, arXiv:1812.05944

[2]. Q. Cao, Y. Ying and P. Li, "Similarity Metric Learning for Face Recognition," 2013 IEEE International Conference on Computer Vision, Sydney, NSW, 2013, pp. 2408-2415, doi: 10.1109/ICCV.2013.299.

[3]. K. He, X. Zhang, S. Ren and J. Sun, "Deep Residual Learning for Image Recognition," 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, 2016, pp. 770-778, doi: 10.1109/CVPR.2016.90.

[4]. D. Chicco, "Siamese neural networks: an overview," Artificial Neural Networks, Methods in Molecular Biology, vol. 2190, 3rd ed., New York City, New York, USA: Springer Protocols, Humana Press, 2020, pp. 73-94, doi: 10.1007/978-1-0716-0826-5_3, ISBN 978-1-0716-0826-5, PMID 32804361.

[5]. Yu A. Malkov, and D. A. Yashunin. "Efficient and robust approximate nearest neighbor search using Hierarchical Navigable Small World graphs." TPAMI, preprint: https://arxiv.org/abs/1603.09320

[6]. A. Morgand and M. Tamaazousti, "Generic and real-time detection of specular reflections in images," 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, 2014, pp. 274-282.