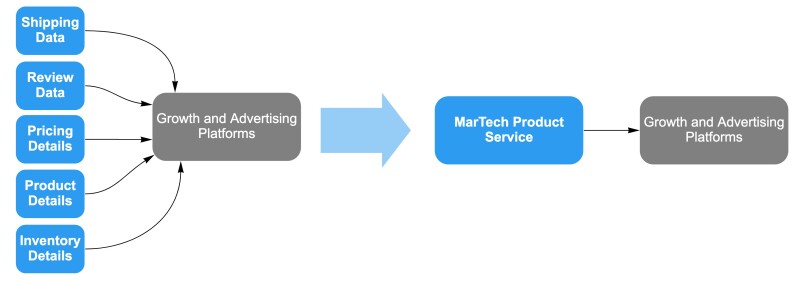

Advertisements are a key aspect of Wayfair’s marketing and growth strategy. Powering each of these ads is a wealth of product data (name, description, price, quantity), all used to maximize RoAS (Return On Advertising Spend) and present the highest quality ads to potential and returning customers. As one might expect, managing this data is no easy task. Different teams own different subsets of the data, and each exposes it through its own platform and access method.

As a response to this situation, the MarTech Product Service was founded. Our goal? To streamline access to all product data for the Wayfair Growth and Advertising Platform (GAP) organization, whose goal is to build all of the technology that enables Wayfair to advertise at scale.

A Look Into the MarTech Product Service (MPS):

Platform Vision and Features:

The MPS team was founded with the following vision in mind: To provide GAP teams with a scalable event-driven source of truth for product data. In doing so, we would enable them to utilize accurate, customizable, and timely data to best fulfill their respective missions.

Unpacking this vision statement further, the platform’s features include:

- Scalable: Uses a microservice architecture hosted on Kubernetes, which allows for a "plug-and-play" approach to adding new product data attributes and services

- Event-driven: Utilizes Kafka streaming as the source of event-driven changes

- Source of truth for product data: Provides GAP with a single system that reduces the complexity around accessing product data

- Accurate (data): Provides microservice apps that monitor data quality and allow for ad-hoc data refreshes

- Customizable (data): Provides multiple methods of accessing an aggregated view of all product data, including:

  - Real-time access via GraphQL

  - Event streaming changes via Kafka topics

  - Historical log of changes via BigQuery for big data analysis

- Timely (data): Provides strict SLAs monitored via Datadog

Platform Architecture:

The best way to visualize and understand the platform architecture is to examine the lifecycle of a product data change event within MPS.

Lifecycle of a Product Data Change Event:

Step 1: Upstream Publishing:

Product data changes begin when an upstream team (owners of specific data attributes) publishes events to specific Kafka topics. Kafka is Wayfair’s messaging and streaming platform of choice because “Kafka has better throughput, highly reliable, built-in partitioning, replication, easily scalable, and fault-tolerance, which makes it a good solution for large scale message processing applications.”

Due to variation in integrations with multiple upstream teams, data in these Kafka events can vary. In some instances, it is “fully enriched” (all of the necessary data is present), while in others, it is only an event change notification, with the latter requiring additional data enrichment during later steps.
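
To make the two event shapes concrete, here is a minimal sketch of how a consumer might tell them apart. It is not MPS code: the `UpstreamEvent` class, the `route` helper, and the attribute names `name` and `price` are all assumptions made for illustration.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical event model; field and attribute names are illustrative only.
final class UpstreamEvent {
    final String productId;
    final Map<String, String> attributes; // empty for a bare change notification

    UpstreamEvent(String productId, Map<String, String> attributes) {
        this.productId = productId;
        this.attributes = Collections.unmodifiableMap(new HashMap<>(attributes));
    }

    // "Fully enriched" here means every required attribute is already present.
    boolean isFullyEnriched(List<String> requiredAttributes) {
        return attributes.keySet().containsAll(requiredAttributes);
    }
}

final class EventKinds {
    // Assumed set of attributes a downstream consumer needs.
    static final List<String> REQUIRED = Arrays.asList("name", "price");

    // Decides whether an event can be published as-is or needs enrichment first.
    static String route(UpstreamEvent event) {
        return event.isFullyEnriched(REQUIRED) ? "publish" : "enrich-then-publish";
    }
}
```

An event carrying both `name` and `price` is routed to `publish`, while one carrying only a product ID is routed to `enrich-then-publish`.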

Step 2: Intake and Processing:

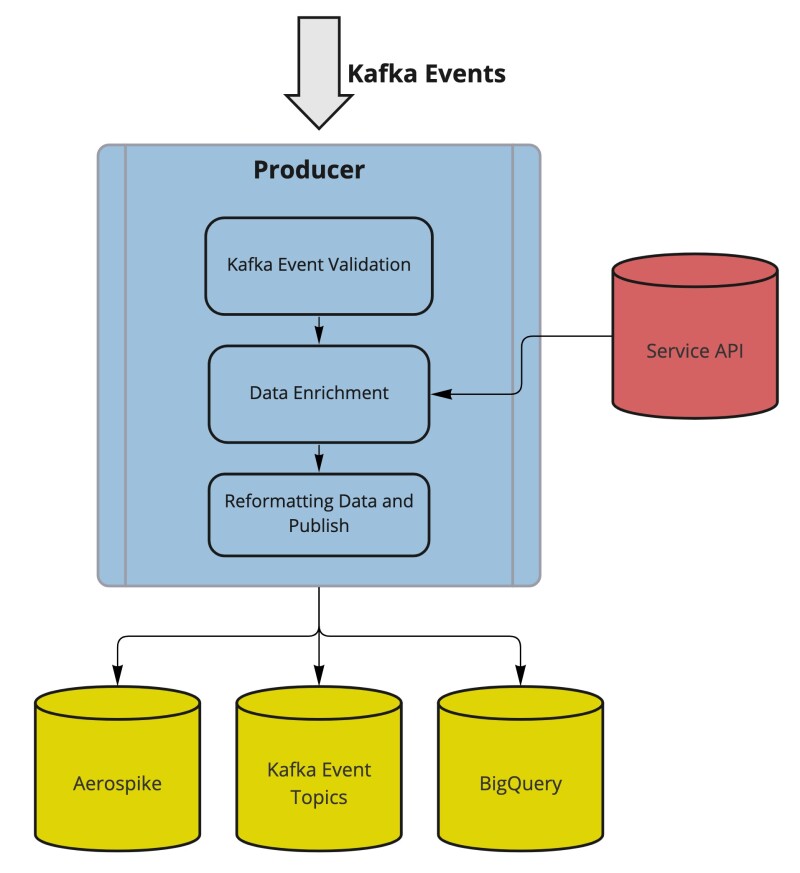

Once product data changes are present in Kafka, MPS consumes these messages via Producers. Producers have a dual function: first, they consume data from Kafka topics; next, they process these event changes and produce them to downstream services. Producers are Kubernetes-hosted Java applications that use Java and Akka streaming, along with the Guice framework for dependency injection. Producers were originally created using Java streams but are being migrated to Akka because of its asynchronous stream processing, scalability, and general ease of use.

“Plug and Play”

Since each Producer corresponds to one Kafka topic, a “plug and play” approach is possible: supporting a new Kafka topic (and its associated attributes) only requires creating an additional Producer. This enables the platform to quickly scale the number of product data attributes when requirements or use cases change.
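
The one-Producer-per-topic idea can be sketched as a small registry. This is an in-process simplification and an assumption on our part: real Producers are separate Kubernetes-hosted applications, and the `ProducerRegistry` class and the topic names used with it are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Hypothetical registry: exactly one handler ("Producer") per Kafka topic.
final class ProducerRegistry {
    private final Map<String, Function<String, String>> producersByTopic = new LinkedHashMap<>();

    // Supporting a new topic means registering one more Producer;
    // existing Producers are left untouched.
    void register(String topic, Function<String, String> producer) {
        if (producersByTopic.putIfAbsent(topic, producer) != null) {
            throw new IllegalArgumentException("Topic already has a Producer: " + topic);
        }
    }

    // Hands a payload to the Producer that owns the topic.
    String dispatch(String topic, String payload) {
        Function<String, String> producer = producersByTopic.get(topic);
        if (producer == null) {
            throw new IllegalArgumentException("No Producer registered for topic: " + topic);
        }
        return producer.apply(payload);
    }

    int size() {
        return producersByTopic.size();
    }
}
```

The point of the design is isolation: adding a Producer for a new topic cannot change the behavior of the Producers already registered.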

“Processing and Producing”

Once Kafka events are consumed, the producer begins processing data with the following steps:

- Validation: Kafka events are validated against an Avro schema to catch any data corruption.

- Enrichment: If a Kafka event is only an event change notification, the Producer queries a Service API to fetch the rest of the needed data. These Service APIs are owned and maintained by their respective upstream teams.

- Publishing: Events are reformatted and published to:

  - Aerospike Cache

  - Kafka Event Topics

  - BigQuery
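
The three steps above can be sketched as a single method. This is a simplified illustration rather than the actual Producer code: validation is reduced to a required-field check instead of Avro schema validation, the Service API is a plain function, and the cache, topic, and warehouse are generic sinks. The `ProducerPipeline` class and the `productId` field name are hypothetical.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;

// Hypothetical sketch of the validate -> enrich -> publish flow.
final class ProducerPipeline {
    private final List<String> requiredFields;
    private final Function<String, Map<String, String>> serviceApi; // stand-in for an upstream Service API
    private final List<Consumer<Map<String, String>>> sinks;        // stand-ins for cache, topic, warehouse

    ProducerPipeline(List<String> requiredFields,
                     Function<String, Map<String, String>> serviceApi,
                     List<Consumer<Map<String, String>>> sinks) {
        this.requiredFields = new ArrayList<>(requiredFields);
        this.serviceApi = serviceApi;
        this.sinks = new ArrayList<>(sinks);
    }

    // Returns true if the event was published, false if it was rejected.
    boolean process(Map<String, String> event) {
        // Validation: reject events that cannot be tied to a product.
        String productId = event.get("productId");
        if (productId == null || productId.isEmpty()) {
            return false;
        }
        // Enrichment: a bare notification lacks fields, so fetch them.
        Map<String, String> enriched = new HashMap<>(event);
        if (!enriched.keySet().containsAll(requiredFields)) {
            for (Map.Entry<String, String> entry : serviceApi.apply(productId).entrySet()) {
                enriched.putIfAbsent(entry.getKey(), entry.getValue());
            }
        }
        if (!enriched.keySet().containsAll(requiredFields)) {
            return false; // still incomplete after enrichment
        }
        // Publishing: every sink receives the same enriched event.
        for (Consumer<Map<String, String>> sink : sinks) {
            sink.accept(enriched);
        }
        return true;
    }
}
```

Because every sink receives the same enriched event, the cache, the event topic, and the historical log stay consistent with one another in this sketch.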

We chose Aerospike due to its high performance, availability, scalability, ease of development, and high level of support and tenure at Wayfair. It easily supports the average of more than 80 million writes per day that Producers process, as well as peaks of 200 million writes during periods of high traffic. Similarly, BigQuery has a high level of support at Wayfair and “delivers consistent performance on large datasets with an easy API, making it suitable for a wide range of analytic tasks.”

Step 3: Client Consumption

Once the data is stored, clients can access it through the MPS service layer via GraphQL, Kafka, or BigQuery, depending on their use case.

GraphQL:

Instead of allowing direct access to the Aerospike cache, a GraphQL service is used as an intermediary to allow for better query customization. GraphQL specializes in providing clients with the data they request and nothing more. The current SLA for batches of 200 products is < 300 ms.
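
As an illustration of how a client might stay within that batch size, the sketch below splits a list of product IDs into batches of 200 and builds one query per batch that asks only for the fields it needs. The `products(ids: ...)` query shape and the field names are assumptions, not the actual MPS schema, and the string building assumes IDs contain no quotes (a real client would more likely pass the IDs as GraphQL variables).

```java
import java.util.ArrayList;
import java.util.List;

final class GraphQlBatches {
    static final int BATCH_SIZE = 200; // batch size the SLA above refers to

    // Splits items into consecutive batches of at most `size` elements.
    static <T> List<List<T>> partition(List<T> items, int size) {
        if (size <= 0) {
            throw new IllegalArgumentException("size must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int start = 0; start < items.size(); start += size) {
            int end = Math.min(start + size, items.size());
            batches.add(new ArrayList<>(items.subList(start, end)));
        }
        return batches;
    }

    // Builds a query against a hypothetical schema, requesting only `fields`.
    static String buildQuery(List<String> productIds, List<String> fields) {
        StringBuilder ids = new StringBuilder();
        for (String id : productIds) {
            if (ids.length() > 0) {
                ids.append(", ");
            }
            ids.append('"').append(id).append('"');
        }
        return "query { products(ids: [" + ids + "]) { " + String.join(" ", fields) + " } }";
    }
}
```

With 450 product IDs, `partition` yields batches of 200, 200, and 50, so the client would issue three requests.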

Kafka:

Kafka is available for clients who need to consume events in real time and wish to use an event-driven architecture. Each event contains fully enriched data formatted according to Avro schemas, which is an important benefit that MPS provides: clients no longer need to reach out to multiple services to enrich skeleton change events themselves.

BigQuery:

Every processed event change is available in BigQuery tables, which are ideal for big data analysis.

Side Steps: Data Quality

As mentioned above, accurate data is of the utmost importance to the MPS platform. To ensure data quality, two standalone microservice applications, Data Validator and Rewarmer, were created. Both are Java applications hosted in Kubernetes.

Data Validator

- Periodically checks a subset of data within the Aerospike Cache against Service APIs

- Reports findings to Grafana Dashboards and Datadog Alerts
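
A check of this kind might look like the sketch below, which samples cached entries at random and compares each one against the source of truth. It is an assumption-laden illustration: the cache is a plain map, the Service API is a function, and the reporting step is left out. The `DataValidatorSketch` class and its parameters are hypothetical.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;
import java.util.Objects;
import java.util.Random;
import java.util.function.Function;

final class DataValidatorSketch {
    // Compares a random sample of cached values against the Service API and
    // returns the product IDs whose cached value differs.
    static List<String> findMismatches(Map<String, String> cache,
                                       Function<String, String> serviceApi,
                                       int sampleSize,
                                       Random random) {
        List<String> ids = new ArrayList<>(cache.keySet());
        Collections.sort(ids);            // fixed starting order, so a seeded Random is reproducible
        Collections.shuffle(ids, random); // random subset of the cache
        int count = Math.max(0, Math.min(sampleSize, ids.size()));
        List<String> mismatches = new ArrayList<>();
        for (String id : ids.subList(0, count)) {
            if (!Objects.equals(cache.get(id), serviceApi.apply(id))) {
                mismatches.add(id);
            }
        }
        return mismatches;
    }
}
```

The size of the returned list relative to the sample size gives a mismatch rate that could be fed to a dashboard or alert.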

Rewarmer

- Triggers refreshes of specific products or of the entire dataset on an ad-hoc basis when data quality issues arise

- Publishes messages to Kafka topics which are then consumed by Producers
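
The key property here is that a refresh reuses the normal Producer path instead of writing to the cache directly. The sketch below illustrates that idea under stated assumptions: the Kafka topic is a plain `Consumer<String>`, and the `RewarmerSketch` class and the JSON message format are hypothetical.

```java
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.function.Consumer;

final class RewarmerSketch {
    // Stand-in for a Kafka topic that Producers consume.
    private final Consumer<String> refreshTopic;

    RewarmerSketch(Consumer<String> refreshTopic) {
        this.refreshTopic = refreshTopic;
    }

    // Publishes one bare change notification per distinct product ID and
    // returns how many were sent. Producers then enrich and republish them.
    int refresh(Collection<String> productIds) {
        int sent = 0;
        for (String id : new LinkedHashSet<>(productIds)) { // de-duplicate, keep order
            refreshTopic.accept("{\"productId\":\"" + id + "\",\"reason\":\"rewarm\"}");
            sent++;
        }
        return sent;
    }
}
```

A full refresh would be the same call with every known product ID passed in.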

Platform Diagram

Impact & Stakeholders:

For the past 2 years, MPS has been serving several mission-critical systems within the GAP organization. Our key consumer is Product Data Feeds, which consumes both Kafka events and the MPS GraphQL service to power the generation of advertising feeds. The feeds system ensures that appropriate parts of our product catalog are available to key advertising partners such as Google with extremely low latency and high accuracy. Product Data Feeds handles over 100 feeds, with more than 600 million items added and 125 million calls across all feeds each day.

Other clients include the Customer Communication Platform, which consumes Kafka events to trigger back-in-stock emails, and the Notifications Platform, which uses the GraphQL service for price-related data.

Conclusion:

MPS is an event change processing and notification platform that is key to delivering and streamlining access to real-time product data for teams within GAP. As we look forward, we have plans to expand the platform and support new use cases. We also want to continually scale the number of product attributes supported and are constantly looking to squeeze out more performance from the platform. If you are interested in helping in these areas and tackling exciting challenges in large-scale streaming systems to solve critical business problems, Wayfair is the place for you.