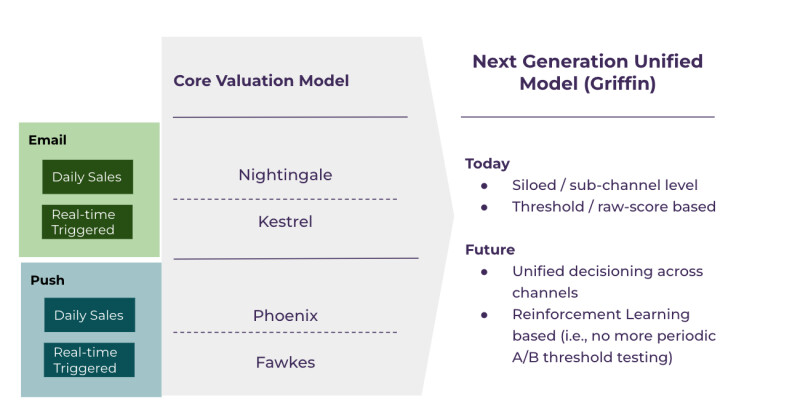

Every day, Wayfair sends millions of marketing emails and push messages to communicate with our customers around the world. For both of these notification channels (emails and push messages), we have two types of notification: 1) daily sales updates and 2) customer behavior-triggered notifications. Daily sales updates are batch-scheduled messages that we send to customers to promote our products, while triggered notifications are sent in real time in response to specific customer activity on our site, such as leaving products in the shopping cart without checking out.

Previously, we built four separate machine learning models to make separate send decisions - one for each combination of channel and type of notification. This made it challenging to manage different models for such a large customer base. For example, we occasionally sent duplicated content across notifications to some customers. To improve customer satisfaction, increase engagement, and make good send decisions across channels/types, we developed a notifications governance model called Griffin. Griffin consumes our existing model signals, plus new content signals, to make the final, coordinated send decisions. The first generation of Griffin uses reinforcement learning and primarily supports real-time, customer behavior-triggered email. Its scalable design enables future cross-channel sending decisions across all notification types.

Why use Reinforcement Learning?

The primary technique we use is Reinforcement Learning (RL) with Contextual Bandits. Reinforcement Learning is a technique that balances exploration (trying randomized decisions to gather new information) with optimization (taking the decision the model currently believes is best). It uses the rewards from both optimized and randomized decisions to update the RL model.

To translate the academic terms into our business case: in our daily notification environment, Griffin takes the model's best action (send or do not send a notification) for a percentage of decisions and a randomized action for the rest (for example, taking the opposite of the model's optimized decision 20% of the time). We then collect customer feedback (clicks on, or unsubscribes from, our notifications) and use it to update the Griffin model and continue the learning cycle.
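The explore/optimize split described above can be sketched as follows. This is a minimal illustration, not Griffin's actual implementation: the function name `choose_action`, the action labels, and the idea of logging a propensity alongside each decision are assumptions made for the example, and the 20% rate is simply the figure used in the text.

```python
import random

# Hypothetical action labels for a single send decision.
ACTIONS = ("send", "no_send")


def choose_action(model_action, explore_rate=0.2, rng=random):
    """Return (action, propensity, explored) for one send decision.

    With probability `explore_rate` the model's optimized decision is
    flipped (exploration); otherwise it is kept. The propensity is the
    probability with which the returned action was chosen.
    """
    if model_action not in ACTIONS:
        raise ValueError(f"unknown action: {model_action!r}")
    if not 0.0 <= explore_rate <= 1.0:
        raise ValueError("explore_rate must be between 0 and 1")

    explored = rng.random() < explore_rate
    if explored:
        # Exploration: take the opposite of the model's optimized decision.
        action = "no_send" if model_action == "send" else "send"
        propensity = explore_rate
    else:
        # Optimization: keep the model's decision.
        action = model_action
        propensity = 1.0 - explore_rate
    return action, propensity, explored
```

Recording the propensity with each decision is a common design choice in bandit systems because it lets the rewards from randomized actions be reweighted later when evaluating a new policy offline.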

The major benefit of using RL in our business case is that we no longer need to perform repetitive model retraining and A/B testing. Currently, four supervised ML models make notification sending decisions in our production environment. We need to retrain these models periodically to avoid model decay, and every time we change the model architecture or simply tweak some business rules, we run another A/B test to understand whether the retrained models are significantly better than the old ones. An RL model, on the other hand, automatically updates its decision-making based on rewards from a set of randomized actions, and we use Offline Policy Evaluation (OPE) to estimate the performance difference between the updated model and the previous model. The continuous exploration feature of the RL algorithm, where the RL model takes exploratory actions to capture new signals, also helps us adapt rapidly to a changing business environment without restarting the entire model retraining and A/B testing process.
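As an illustration of how Offline Policy Evaluation can compare two policies from logged decisions, here is a minimal sketch of inverse propensity scoring (IPS), one standard OPE estimator. The post does not say which estimator Griffin uses, so IPS is an assumption here, as are the function name `ips_value` and the log layout of `(context, action, reward, propensity)` tuples.

```python
def ips_value(logs, target_policy):
    """Estimate the average reward of `target_policy` from logged data.

    logs: iterable of (context, action, reward, propensity), where
        propensity is the probability the logging policy gave to `action`.
    target_policy: callable mapping a context to a dict of
        {action: probability} under the policy being evaluated.
    """
    total = 0.0
    count = 0
    for context, action, reward, propensity in logs:
        if propensity <= 0.0:
            raise ValueError("logged propensity must be positive")
        # Reweight each logged reward by how much more (or less) likely
        # the target policy is to take the logged action.
        weight = target_policy(context).get(action, 0.0) / propensity
        total += weight * reward
        count += 1
    if count == 0:
        raise ValueError("no logged decisions to evaluate")
    return total / count
```

Calling `ips_value` once with the updated policy and once with the previous policy on the same logs gives two estimates whose difference approximates the performance gap, without running a new A/B test. The estimate is only reliable when the logging policy explored every action the target policy might take.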

Griffin Model Structure

When designing Griffin, we considered four key factors:

- The actions we want to take (types of notifications to send),

- The decision-making level (per customer or per email),

- The features we should incorporate, and

- The reward function that will be used to evaluate the model's performance.

These four factors are interdependent, since we need to determine the best way to leverage various feature types while keeping the model structure flexible. Rather than making decisions only at the customer level or the email-type level, it is more flexible and powerful to make sending decisions at the level of each individual notification, so that a customer can receive different sending decisions for different types of notifications. This also enables personalized send decisions, ensuring that the right content reaches the right customers. Once we have selected the sending decision structure, we can select the features that fit the model and define the reward function accordingly.

Decision Making Actions and Features

To improve send decisions at the notification level, we incorporate individual notification context (marketing campaign information, triggering behavior, etc.). This approach creates flexibility in that we can include multiple notification types in the same model. Figure 3 shows an example data schema.
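The sketch below is not the schema from the figure. It is only a hypothetical illustration of what a notification-level record could look like if it carried the kinds of context mentioned above. Every field name, the `NotificationContext` class, and the allowed values are assumptions made for the example.

```python
from dataclasses import dataclass, field, asdict
from typing import Dict, Optional

# Hypothetical allowed values, based on the channels and types named in the post.
CHANNELS = ("email", "push")
NOTIFICATION_TYPES = ("daily_sales", "triggered")


@dataclass
class NotificationContext:
    """One candidate notification for one customer (illustrative only)."""

    customer_id: str
    channel: str
    notification_type: str
    campaign: Optional[str] = None          # marketing campaign information
    trigger_behavior: Optional[str] = None  # e.g. a cart left without checkout
    # Scores from existing upstream models, keyed by a hypothetical model name.
    upstream_scores: Dict[str, float] = field(default_factory=dict)

    def __post_init__(self):
        if self.channel not in CHANNELS:
            raise ValueError(f"unknown channel: {self.channel!r}")
        if self.notification_type not in NOTIFICATION_TYPES:
            raise ValueError(f"unknown type: {self.notification_type!r}")
```

Because channel and notification type are ordinary fields rather than separate tables, one record shape can describe a triggered email and a daily sales push message alike, which is the flexibility the paragraph above describes. A record can be flattened with `asdict(ctx)` before being passed to a model.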

Reward Function

The ultimate goal of Griffin is to optimize our notification sending decisions and to send customers the most relevant communication. Ideally, we would optimize long-term metrics directly (for example, overall customer conversion rates). However, measuring such metrics can take more than six weeks in some cases, and the impact of Griffin over such a long time frame is difficult to separate from other changing on-site factors, such as personalization algorithms and product page layouts. Therefore, Griffin’s reward function uses email engagement metrics (metrics that can be observed in an email/push session, like clicks and unsubscribes) as a more convenient alternative.

This choice of reward function provides rapid feedback and allows us to update our model more quickly and more frequently. Fortunately, our previous email and push message research showed a strong correlation between email engagement metrics, like clicks and unsubscribes, and long-term metrics, like overall customer conversion rates. This gives us confidence that email engagement metrics are appropriate leading indicators on which to base decisions.
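A reward built from session-level engagement signals might look like the sketch below. The post does not publish Griffin's actual reward formula, so the function name `engagement_reward`, the weights, and the choice to give a reward of zero when nothing is sent are all assumptions for illustration.

```python
def engagement_reward(sent, clicked, unsubscribed,
                      click_reward=1.0, unsubscribe_penalty=5.0):
    """Map one notification's observed outcome to a scalar reward.

    The weights are hypothetical: a click is treated as a positive signal
    and an unsubscribe as a larger negative one.
    """
    if not sent:
        # Nothing was sent, so no engagement can be observed (assumed neutral).
        return 0.0
    reward = 0.0
    if clicked:
        reward += click_reward
    if unsubscribed:
        reward -= unsubscribe_penalty
    return reward
```

For example, `engagement_reward(sent=True, clicked=True, unsubscribed=False)` returns `1.0`, while a send that leads to an unsubscribe without a click returns `-5.0`. Weighting unsubscribes more heavily than clicks is one way to encode that losing a subscriber costs more than a single click gains, but the right ratio would need to be set from business data.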

Expanding Griffin to Daily Sales Emails and Push Messages

Once Griffin is built for customer-triggered emails, it can be readily expanded to daily sales emails and push messages. In particular, the flexible input data schema allows us to provide the appropriate customer and email context for these different types of marketing campaigns.

Ultimately, this will allow us to unify our send decisions across channels. Once Griffin is learning from all different types of notifications, cross-channel optimization becomes an implicit part of Griffin’s decisions.

Engineering Deployment

Griffin requires an engineering architecture that supports real-time scoring with a combination of real-time features and batch (pre-computed) features. Before scheduled emails or push messages arrive, we use an Airflow DAG to generate batch-updated features, which may include aggregations such as the number of emails clicked in the past 30 days. To make the most informed real-time send decision, some real-time or near-real-time features are also required, such as how many emails a customer has received in the past hour. You can learn more about Wayfair’s feature-serving platforms in the Hamlet blog post.
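At scoring time, the two kinds of features have to be combined into one input for the model. The following is a minimal sketch of that step under assumed names: `assemble_features`, the feature keys, and the default values are hypothetical and do not describe Wayfair's feature-serving platform.

```python
# Hypothetical defaults used when a feature is missing for a customer.
DEFAULT_FEATURES = {
    "emails_clicked_30d": 0.0,  # batch feature, pre-computed on a schedule
    "emails_received_1h": 0.0,  # real-time feature, computed at request time
}


def assemble_features(batch_features, realtime_features,
                      defaults=DEFAULT_FEATURES):
    """Merge pre-computed and real-time features into one dict for scoring.

    Later sources override earlier ones: defaults, then batch values,
    then real-time values, so the freshest value wins on a key collision.
    """
    features = dict(defaults)
    features.update(batch_features)
    features.update(realtime_features)
    return features
```

Filling in defaults first means a customer with no batch row yet (for example, a brand-new subscriber) still produces a complete feature vector instead of failing at scoring time.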

Additionally, we built a reward logging table and monitors to track multiple KPIs in real time. These KPIs range from engineering requirements such as latency and error rates to business metrics such as sending rates and click-through rates. We also monitor model performance metrics, such as offline testing scores.

The Journey has Just Begun

Our team and our business and engineering partners have learned a great deal since we began building the Griffin model. It's the first time we've built such a large reinforcement learning model to support millions of real-time notifications every day.

To recap, we currently have four types of notification: daily sales emails, daily push messages, customer behavior-triggered emails, and customer behavior-triggered push messages. While Griffin currently supports only triggered emails, our next step is to expand it to cover all four types of notification within a single model.

Having a unified sending decision model across these four notification types allows us to maximize the model's capacity to drive customer engagement and reduce unsubscribes. By learning from customer reactions across different notification channels, we can prioritize the most engaging type of notification for each customer, while avoiding notifications whose content drives little engagement.

Overall, it's always exciting to solve new challenges, and we'll keep experimenting with new methodologies to deliver email and push content that delights and inspires our customers, helping them find their favorite products effectively.