When you think "Kubernetes," the first word that comes to mind probably isn't "frontend." Here at Wayfair our frontend infrastructure is heavily integrated with Kubernetes, including a toolkit that generates frontend apps with Kubernetes integration out of the box.

We have pipelines for both static and server-side rendered (SSR) apps. In this post I'll be focusing on some scaling issues we recently tackled on the Product Details page (PDP), our highest-traffic SSR app.

A little context: We write React on the frontend. For customer-facing apps like PDP we render it server-side using a service called `rndr`. An instance of `rndr` runs in a Docker container, and we use Kubernetes (AKA k8s) to orchestrate these containers in pods. K8s load balances between pods, and allows each app to define the number of pod replicas it needs to serve traffic. Not only that, but it can scale pods automatically, adding or removing replicas to keep CPU, memory, and other metrics within a certain range.

The problem

A few months ago we started seeing errors from `rndr`, mostly from PDP, but they were a bit mysterious. We had traffic spikes at regular intervals with the `rndr` errors showing up at the same time, telling us the service was timing out. Timeouts would cause `rndr` to crash, falling back to client-side rendering only, which slows down the customer experience. At some point it got noticeably worse, with spikes happening regularly in one of our bot-heavy datacenters. This got particularly bad overnight (when normal traffic was low) and we would regularly see 5x-10x traffic spikes.

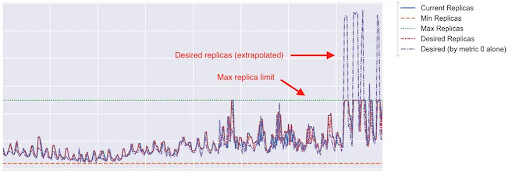

Kubernetes is great at scaling pods, but we realized it's not great at scaling quickly. By the time it scaled up to handle the traffic from the spike, the spike was over. This got even worse in some situations: traffic would spike, k8s would scale up and overcompensate, scale back down and overcompensate again, and end up in a loop that maxed out replicas and resources.

We (Frontend Platform) worked with PDP and the k8s team to figure out what we could do. In the short term, we decided to allocate more pods to PDP than necessary while we figured out how to scale more quickly and appropriately.

The approach

We approached this problem on two fronts:

1. Add workers

`rndr`'s rendering process is synchronous, which means if there's only one thread and it's busy, the next request in line has to wait until the first one is finished. The `rndr` service handles many concurrent requests just fine, but if those requests line up in a way that multiple renders are trying to happen simultaneously, the result is a queue of synchronous work.

If this queue becomes deep enough, the response will time out and error.

Nick's idea was: Create worker threads to parallelize rendering. This seems a little strange in a k8s context, since k8s is designed to parallelize work with replicas, but the theory was that workers would be an escape hatch during a sudden influx of work, and then k8s would take over parallelization after it had time to scale up.
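To make the queueing problem concrete, here's a minimal sketch in Python (not `rndr`'s actual code). The numbers are hypothetical: a burst of 20 simultaneous requests, a 50 ms render, and a 500 ms timeout. The function names are made up for illustration, and the sketch assumes every worker gets its own CPU core, which is an optimistic assumption.

```python
import heapq

def render_latencies(arrivals_ms, render_ms, workers=1):
    """Return per-request latency (queue wait + render time) in ms.

    Each worker renders one request at a time, synchronously. Requests are
    served in arrival order by whichever worker frees up first.
    """
    free_at = [0.0] * workers  # time at which each worker becomes idle
    heapq.heapify(free_at)
    latencies = []
    for arrival in sorted(arrivals_ms):
        start = max(arrival, heapq.heappop(free_at))  # wait for a free worker
        finish = start + render_ms
        heapq.heappush(free_at, finish)
        latencies.append(finish - arrival)
    return latencies

def timed_out(latencies, timeout_ms):
    """Count requests whose total latency exceeds the timeout."""
    return sum(1 for latency in latencies if latency > timeout_ms)

# Hypothetical burst: 20 requests land at the same instant.
burst = [0.0] * 20
single = render_latencies(burst, render_ms=50, workers=1)
pooled = render_latencies(burst, render_ms=50, workers=4)

print(timed_out(single, timeout_ms=500))  # 10: the back half of the queue times out
print(timed_out(pooled, timeout_ms=500))  # 0: the slowest request takes 250 ms
```

With one thread, the tenth request finishes right at the timeout and everything behind it errors. With four workers the same burst clears in a quarter of the time, which is the escape hatch we were after while k8s catches up.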

There was one problem: worker threads need CPU, so we weren't sure if adding workers to pods that were already struggling with CPU would make things worse. On a high-traffic site like PDP the stakes are high. We needed a way to adjust CPU without affecting autoscaling, which leads us to...

2. Scale on incoming requests

CPU seemed like an indirect scaling method for `rndr`. We were seeing CPU spikes when requests would pile up, but we thought scaling on incoming requests would have a more immediate effect. It turned out the difference in metrics didn't equate to faster scaling, but there's another important reason to scale on requests: it allows us to balance autoscaling and CPU resources using separate levers.

Kubernetes lets you adjust how many CPU cores you allocate to each pod. It also lets you autoscale based on CPU. Two great tastes that taste great together, except when you want to adjust one of those tastes without tasting the other taste.

Our CPU settings were coupled to our scaling, so we couldn't give the pods more CPU (for example, when we wanted to add worker threads) without affecting the number of replicas, and vice versa.

Basically: Adjusting one would have an unknown effect on the other, so making any adjustments was risky.
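Here's a rough sketch of why the two were coupled. It uses the core rule from the Kubernetes Horizontal Pod Autoscaler docs, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, and the fact that CPU utilization is measured relative to the CPU each pod requests. The pod counts, core counts, and targets are made-up numbers, and the sketch ignores the autoscaler's tolerance and stabilization behavior.

```python
import math

def desired_replicas(current_replicas, current_value, target_value):
    """Core HPA rule: scale in proportion to metric / target, rounded up."""
    return math.ceil(current_replicas * current_value / target_value)

def cpu_utilization_pct(used_cores, requested_cores):
    """CPU utilization as the autoscaler sees it: usage relative to the request."""
    return 100.0 * used_cores / requested_cores

# Hypothetical app: 10 pods, each using 0.75 cores, scaling target of 50% CPU.
pods = 10
used = 0.75

# Same traffic, same CPU usage; only the per-pod CPU request changes.
with_1_core = desired_replicas(pods, cpu_utilization_pct(used, 1.0), 50)
with_2_cores = desired_replicas(pods, cpu_utilization_pct(used, 2.0), 50)
print(with_1_core, with_2_cores)  # 15 8

# Scaling on requests per second instead (hypothetical: 30 RPS per pod
# observed, 20 RPS per pod targeted) does not depend on the CPU request.
by_requests = desired_replicas(pods, 30, 20)
print(by_requests)  # 15
```

In this example, doubling the CPU request to make room for worker threads nearly halves the replica count the autoscaler asks for, even though traffic hasn't changed. The request-based answer stays put no matter how much CPU each pod gets.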

The long and winding road

The first thing we looked at was a custom scaling metric using Prometheus. Prometheus is a tool that gathers metrics for k8s services, which you can then use for autoscaling. We gather CPU/memory metrics by default, but we can also expose our own custom metrics, so we thought "let's use active renders."

On the Frontend Platform we were woefully inexperienced with Prometheus, so the first step was to investigate how to interact with it and research best practices. I'll save the details of that story for another time.

For now I'll just say we got scaling based on active renders working, but realized it would be tricky to separate the synchronous processing time from time spent waiting for GraphQL requests, and slow requests could cause us to scale up much higher than necessary.

Along the way, we got a lot of advice from the k8s team. They were super helpful, and we would have spent way more time on this without them. They pointed us to an existing metric that counts incoming requests, which we used as a proxy for active renders. Once we saw the problems with `active_renders`, we realized incoming requests would work great not as a proxy but as the autoscaling metric itself!

We added two custom metrics which would work in tandem to handle spikes. The primary metric is requests per second. The second metric looks back at an average of requests over the previous N minutes, so we scale back down gradually after the spike is over. If you're curious, we used Holt-Winters smoothing for this. These are the queries we used for the primary metric and smoothing:

The result: Scale up quickly, scale down slowly.
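As a rough illustration of how two metrics produce that behavior, here's a small Python sketch. It relies on the documented autoscaler behavior that when several metrics are configured, the largest proposed replica count wins. Everything else is an assumption for the example: the traffic numbers, the target of 50 requests per second per pod, and a plain trailing average standing in for Holt-Winters smoothing. These are not our real queries.

```python
import math

def replicas_for(rps, target_rps_per_pod):
    """Pods needed so that each one handles at most the target RPS."""
    return max(1, math.ceil(rps / target_rps_per_pod))

def trailing_mean(samples, window):
    """Average of the most recent `window` samples (fewer at the start)."""
    recent = samples[-window:]
    return sum(recent) / len(recent)

def replica_plan(rps_per_minute, target_rps_per_pod, window):
    """Replica count per minute: the larger of the fast and slow answers."""
    result = []
    for i in range(len(rps_per_minute)):
        seen = rps_per_minute[: i + 1]
        fast = replicas_for(seen[-1], target_rps_per_pod)  # reacts to the spike
        slow = replicas_for(trailing_mean(seen, window), target_rps_per_pod)  # remembers it
        result.append(max(fast, slow))
    return result

# Hypothetical traffic, one sample per minute, with a 5x spike in minute 3.
traffic = [100, 100, 500, 100, 100, 100, 100]
plan = replica_plan(traffic, target_rps_per_pod=50, window=3)
print(plan)  # [2, 2, 10, 5, 5, 2, 2]
```

The fast metric jumps to 10 replicas the moment the spike arrives. Once traffic drops, the slow metric keeps the count at 5 until the spike ages out of the window, instead of snapping straight back to 2.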

At the same time, we made changes that would allow apps to configure the number of worker threads in `rndr`, with the option to have threads dedicated exclusively to bot traffic.

Now we had a way to scale independently of CPU, and a way to handle synchronous rendering with workers. All that was left was to hook it up to the moving freight train that is PDP.

So... how did it go?

I'll hold you in suspense... Did it solve all our problems? Did it go off the rails, taking down Wayfair and deleting all customer data? Keep reading to find out!

Nah, it went great. You probably could have guessed by the title of this post.

After our Prometheus exploration and all of the k8s team's help, we walked through these changes with a PDP engineer. We wanted to pair with them live, partly to transfer this knowledge so they would be more comfortable making future changes, but also partly because we wanted to see for ourselves how these changes would look when we rolled them out on the scale of PDP.

We rolled out the changes in a few steps, and so far things are looking good. Latency was reduced across the board, and the `rndr` error rate is almost zero. We can make adjustments to CPU, memory, and scaling independently. We're now in the process of applying these changes to a few other apps and potentially adding them to the base config so they'll be enabled by default for all new SSR apps.

Huge thanks to Nick Dreckshage, Nicholas Lane, Nick Hackford (that's a lot of Nicks) and Tim Kwak!