Introduction

At Wayfair, data science and machine learning (ML) teams are increasingly utilizing machine learning operations (MLOps) to create and productionalize models. Due to the intricate nature of the ML lifecycle, teams often rely on MLOps tools to streamline the process and manage the complexities involved. Most data science teams at Wayfair already use MLOps tools and workflows for model training and serving, but once a model is deployed there hasn’t been a straightforward solution for tracking data quality and drift.

Earlier this year, Wayfair chose Arize as its model monitoring solution. To make the most of the capabilities Arize has to offer, the ML platform team at Wayfair worked to make it easier for data scientists to get their models into Arize. In this article we’ll talk about the benefits and challenges of MLOps adoption, introduce a pattern for self-service monitoring setup, and give an overview of model monitoring metrics.

What Is Self-Serve Onboarding?

Generally, MLOps refers to systems for storing, serving, and monitoring datasets and models for machine learning. At Wayfair, MLOps tooling is leveraged when a trained model is ready to go into production. The ML platform team builds tools to accommodate the wide range of approaches data scientists take to source data and train models. Because MLOps tools need to be flexible, it’s difficult to create one-size-fits-all approaches that can easily be plugged into any workflow.

In this context, self-serve onboarding refers to tools that teams can quickly configure to meet their needs. Self-serve workflows are flexible enough to accommodate a wide range of use cases, and comprehensive enough to greatly reduce the work teams need to put in when adding new capabilities. For a workflow to be self-serve, teams should have all the information needed to configure tools to fit their own unique data and modeling approach.

Barriers to MLOps Tools Adoption

There are many teams across different domains using ML to drive business value at Wayfair. Historically, data science teams were largely left to their own devices and given flexibility when developing best practices for training and releasing models.

As a result, each team approaches model development and deployment in a slightly different way. Some teams may be slower and more methodical, while others may need to quickly iterate and try many feature sets and models before settling on the best options. Since MLOps as a domain is relatively new, centralized ML platforms need to be built to accommodate a huge range of model types and workflows.

In this new and evolving space, it’s hard to build things that fit well with every approach. If it’s difficult to incorporate platform tools into existing deployment workflows, teams may not be motivated to change their approach. While teams may recognize the potential value of utilizing low-maintenance, ready-made MLOps workflows, the process of re-orchestrating and migrating existing solutions takes time. Often teams are under pressure to focus instead on improving model performance and tackling new use cases.

On the ML platform team, it’s our responsibility to make the modeling and productionalization process easier and more repeatable for the whole organization, which often means meeting teams where they are. It’s important to make sure data scientists can leverage the best tools and frameworks without completely overhauling their approach.

The ML Platform at Wayfair

Wayfair’s ML platform team actively develops and maintains several services to help teams productionalize their models. When making a self-service tool, the team tries to create a basic framework that makes releasing a model as a new service very simple without adding constraints to what teams can build. The main offering in this respect is a templated model handler and deployment service.

When a data scientist begins working to release a new model, they can go to an internal portal and fill out information about the model. When they’re ready to set up a new service for the model version, they can trigger the creation of a GitHub repo that provides model serving templates, orchestration settings, containerization, and Buildkite deployment pipelines.

In the repo, teams fill out a model handler class with steps for loading a model on deployment and producing predictions for each request. The handler is designed to be structured enough that teams can concentrate on sourcing features and outputting results, while still being open-ended enough that teams can add complex logic and configurations to fit their use case. It abstracts away the details of deploying and running a model in a scalable online service, so data scientists and ML engineers can focus on features and predictions.
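To make the shape of such a handler concrete, here is a minimal sketch. The names `ModelHandler`, `load`, `predict`, and `PriceScoreHandler` are hypothetical, as are the toy weights; the interface of Wayfair's actual template is not shown in this article.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict


class ModelHandler(ABC):
    """Hypothetical base class standing in for the templated handler."""

    @abstractmethod
    def load(self) -> None:
        """Load model artifacts once, when the service is deployed."""

    @abstractmethod
    def predict(self, request: Dict[str, Any]) -> Dict[str, Any]:
        """Produce a prediction for a single request."""


class PriceScoreHandler(ModelHandler):
    """Toy linear model; a real handler would load a trained artifact."""

    def load(self) -> None:
        # Stand-in for deserializing a trained model from storage.
        self.weights = {"price": -0.01, "rating": 0.5}
        self.bias = 1.0

    def predict(self, request: Dict[str, Any]) -> Dict[str, Any]:
        # Source the features the model expects from the raw request.
        features = {name: float(request[name]) for name in self.weights}
        score = self.bias + sum(self.weights[n] * v for n, v in features.items())
        return {"score": score, "features": features}


handler = PriceScoreHandler()
handler.load()  # runs once at startup
result = handler.predict({"price": 100, "rating": 4})  # runs per request
```

Keeping loading and prediction as separate steps means the expensive work happens once at startup, while the per-request path only sources features and scores them.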

Wayfair’s Path To Self-Service Model Monitoring

Since model monitoring is a vital component of MLOps, Wayfair’s ML platform team is working closely with Arize AI to develop a long-term strategy around onboarding. The Arize framework is designed to ingest rows of structured data, but our team has found that models aren’t always designed in a way that makes it easy to get their features and predictions into this format. To make things more complicated, sometimes the data needed for monitoring isn’t stored anywhere in the format the model uses, or is dispersed across several tables.

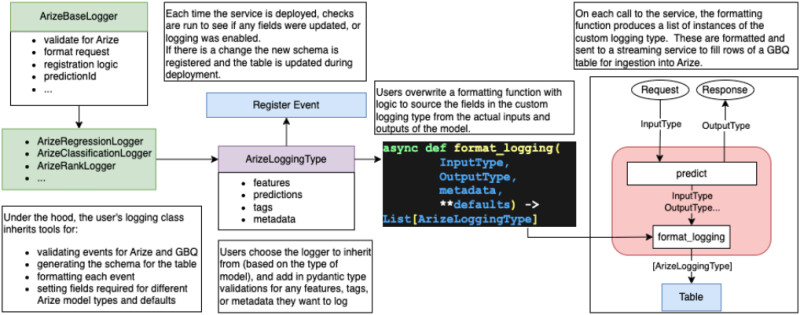

To provide teams with an easier path to model monitoring, the ML platform team at Wayfair developed a new logging tool that is integrated into the templated model handler. Each time the service gets a request, a new step takes in the inputs, outputs, and metadata, pulls out the model features and predictions, and sends them to a table for ingestion into Arize.

As teams fill out the model handler, they define the fields of a class that serves as the schema for the ingestion table, and write a function that builds a list of class instances from the service’s inputs and outputs. The rest of the logic, including registering new tables, validating the formatting for Arize, and asynchronously sending a set number of rows to a table, is built into the service.
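The pattern described above might look roughly like the following sketch. Everything here is an assumption for illustration: `MonitoringRow`, `rows_from_call`, and `BatchLogger` are hypothetical names, the sink is a plain callable rather than a real table writer, and the asynchronous delivery and table registration mentioned above are left out to keep the example short.

```python
from dataclasses import asdict, dataclass
from typing import Any, Callable, Dict, List, get_type_hints


@dataclass
class MonitoringRow:
    """Hypothetical schema: each field becomes a column in the ingestion table."""
    prediction_id: str
    price: float
    rating: float
    score: float


def rows_from_call(inputs: Dict[str, Any], outputs: Dict[str, Any]) -> List[MonitoringRow]:
    """Team-written step: turn one service call into schema instances."""
    return [
        MonitoringRow(item["id"], float(item["price"]), float(item["rating"]), float(score))
        for item, score in zip(inputs["items"], outputs["scores"])
    ]


class BatchLogger:
    """Platform-side step (sketch): validate rows, buffer them, flush in fixed-size batches."""

    def __init__(self, sink: Callable[[List[Dict[str, Any]]], None], batch_size: int) -> None:
        self.sink = sink
        self.batch_size = batch_size
        self.buffer: List[Dict[str, Any]] = []

    def log(self, rows: List[MonitoringRow]) -> None:
        for row in rows:
            # Check every field against the type declared in the schema.
            for name, expected in get_type_hints(type(row)).items():
                if not isinstance(getattr(row, name), expected):
                    raise TypeError(f"{name} should be {expected.__name__}")
            self.buffer.append(asdict(row))
        # Send full batches only; leftover rows wait for the next call.
        while len(self.buffer) >= self.batch_size:
            batch = self.buffer[: self.batch_size]
            self.buffer = self.buffer[self.batch_size:]
            self.sink(batch)


sent_batches: List[List[Dict[str, Any]]] = []
logger = BatchLogger(sink=sent_batches.append, batch_size=2)
inputs = {"items": [{"id": "a", "price": 100, "rating": 4},
                    {"id": "b", "price": 250, "rating": 5},
                    {"id": "c", "price": 80, "rating": 3}]}
outputs = {"scores": [2.0, 1.0, 1.7]}
logger.log(rows_from_call(inputs, outputs))
```

With a batch size of 2, the three rows above produce one flushed batch and leave one row buffered. The team only writes the schema class and the mapping function; validation and batching stay in shared code.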

Important Monitors and Dashboards

Once data is ingested into Arize, implementing monitors and dashboards helps to maintain model health in a production environment.

There are several useful types of monitoring that teams use to help track model performance and identify when a model needs to be retrained:

- Model evaluation metrics (when ground truth is available)

- Prediction drift

- Feature drift (top K features)

- Data quality (top K features and model output)

These monitoring elements assist in detecting most potential issues. Additionally, data scientists often establish their own bespoke monitors for drift, performance, data quality, or any other unique metrics relevant to their model.
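As an illustration of what a drift monitor measures, the sketch below computes the population stability index (PSI), one commonly used drift statistic, between a baseline sample and a production sample. The article does not say which statistic Wayfair's monitors use, so the choice of PSI, the function name `psi`, the bin edges, and the sample values are all assumptions.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], production: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population stability index between two samples, binned by `edges`."""

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * (len(edges) + 1)
        for v in values:
            # Bin index = number of edges the value is at or above.
            counts[sum(1 for e in edges if v >= e)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    p = proportions(baseline)
    q = proportions(production)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


baseline_scores = [0.1, 0.2, 0.4, 0.6, 0.8, 0.9]
shifted_scores = [0.5, 0.7, 0.8, 0.85, 0.9, 0.95]
edges = [0.33, 0.66]

no_drift = psi(baseline_scores, baseline_scores, edges)
drift = psi(baseline_scores, shifted_scores, edges)
```

Identical samples give a PSI of zero, and the value grows as the production distribution moves away from the baseline. The same function applies to a single feature (feature drift) or to model outputs (prediction drift).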

Configuring the correct thresholds and evaluation windows for these monitors may take several iterations. Once they are set, it's crucial to route alerts through the right channels, such as Slack, PagerDuty, or Opsgenie.
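The interaction between a threshold and an evaluation window can be sketched as follows. `WindowedMonitor` is a hypothetical class, not part of any monitoring product, and the threshold and window values are arbitrary.

```python
from collections import deque


class WindowedMonitor:
    """Fires when the mean of the last `window` observations exceeds `threshold`."""

    def __init__(self, threshold: float, window: int) -> None:
        self.threshold = threshold
        self.values = deque(maxlen=window)  # old observations fall off automatically

    def observe(self, value: float) -> bool:
        self.values.append(value)
        # Stay silent until the window is full to avoid noisy early alerts.
        if len(self.values) < self.values.maxlen:
            return False
        return sum(self.values) / len(self.values) > self.threshold


monitor = WindowedMonitor(threshold=0.2, window=3)
alerts = [monitor.observe(v) for v in [0.1, 0.1, 0.1, 0.5]]
```

A wider window smooths out one-off spikes at the cost of slower detection, and a lower threshold does the opposite, which is why tuning the pair tends to take a few rounds.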

Alongside configuring the right monitors, establishing dashboards for the model is equally important for visually tracking the model’s performance and feature evolution over time. Arize, for example, offers several templates to get started.

The dashboards we recommend include:

- Feature Dashboard - Such a dashboard can offer profound insights into the distribution of your features and shed light on the features potentially influencing the model's output.

- Performance Dashboard - This type of dashboard can assist in tracking crucial business or technical KPIs, alongside the model’s performance metrics such as AUC or F1.
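For reference, a performance metric such as F1 can be computed from logged predictions once ground truth arrives. The sketch below is a plain-Python illustration with made-up labels; `f1_score_binary` is a hypothetical helper, not a function from any monitoring library.

```python
from typing import Sequence


def f1_score_binary(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """F1 for binary labels, where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


actuals = [1, 0, 1, 1, 0, 0]
predictions = [1, 0, 0, 1, 1, 0]
f1 = f1_score_binary(actuals, predictions)
```

Plotting this value per day or per model version alongside business KPIs is what makes a gradual degradation visible.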

Conclusion

The concept of self-serve MLOps tooling brings a new dynamic to ML projects by providing the means to automate and streamline formerly time-consuming, disparate processes. A well-structured ML platform is the backbone of this setup, facilitating the smooth transition of projects from inception to completion.

While setting up self-serve onboarding takes time and discipline, Wayfair’s example shows what’s possible when lowering the barriers to MLOps tools adoption.

Overall, the goal is to simplify and democratize the ML process by making it more accessible and efficient for data scientists and ML engineers. In adopting these best practices, we move a step closer to a future where modern MLOps becomes a standard for organizations venturing into machine learning.