Introduction

Understanding the time-varying intents and preferences of Wayfair’s customers is crucial for many of our marketing team’s activities. These activities include deciding bids for online searches, curating relevant content on the homepage, personalizing email content and many more. To accomplish this goal, Wayfair’s Marketing Data Science team provides an ecosystem of customer-centric machine learning models and decision engines that work together to help us understand a customer’s needs, what makes them unique, and where they are in the consideration cycle. These are generally classification models trained at a regular frequency using the most recent 12 months of historical data about our customers, such as what they are interested in buying, and what pages they most often visit. The raw model outputs rank customers’ likelihood to perform an action. For example, our Intent Model produces estimates for whether a customer will purchase from Wayfair, and our Functional Needs Models (FN models) capture the likelihood that a customer will purchase specific kinds of products. These model outputs, however, do not represent actual probabilities that customers might purchase, and do not incorporate information on the timing of purchases.

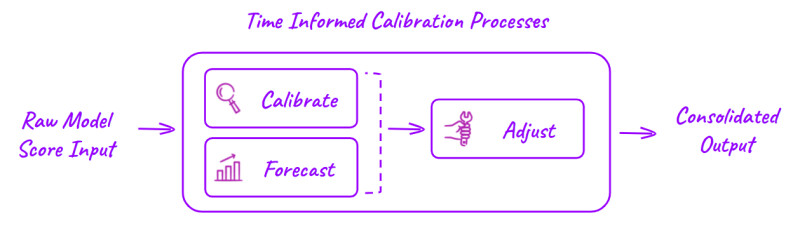

Time Informed Calibration (TIC) addresses these two problems. It extends the capabilities of our current machine learning models by converting the raw model outputs to realistic probabilities of customer action and adjusting these values based on timing. These capabilities allow us to provide more interpretable and accurate estimates of customers’ preferences and, in turn, improve the efficiency of our marketing activities.

Problems

Are raw model outputs enough?

The raw outputs (or scores) from our machine learning models are very good at ranking customers’ likelihood to perform an action, but they do not tell us how many actions we can expect from customers. This is because we oversample positive labels to balance classes during training, and because of the ways various classifiers are trained (see here). In the case of the FN models, a model output of 0.5 for area rugs does not mean that half of the customers with that score would end up purchasing an area rug, even though we do know that customers with a score of 0.4 are less likely to purchase. The rankings themselves might be sufficient if, say, we simply want to target the top 1 million customers who are the most likely to purchase. However, we frequently need a better understanding of customer behavior. For example, we might want to know how many orders to expect from these customers to gauge the effectiveness of our marketing campaigns; we might also want to know how much a customer wants to purchase an area rug compared to other functional needs (e.g. Christmas trees or patio furniture), so we can decide on the most relevant content to show to that customer. For these applications, we need true probabilistic representations of these customers' likelihood to purchase. To solve this problem, we use a calibration process that maps the raw model output to purchase probabilities using historical order information.

What about expected changes?

Our calibration process relies on information from the past, but the predictions we need to make are generally for the future. In the case of the Intent Model, we might be able to understand how the raw model output translates to probabilities of purchase using actual order information from the past month, but what if we expect tomorrow’s sales to deviate far from the past month’s average? How would we then make plans for how much to bid for a certain customer tomorrow when the sales numbers are not there yet?

This is a problem for our models because Wayfair’s sales vary greatly from day to day. The plot below shows an illustration of the typical daily sales history at Wayfair. We see strong weekly seasonality (e.g. customers shop more on weekends and less on weekdays), impacts of annual sales holidays (e.g. Way Day, Wayfair’s biggest annual sales event, and Black Friday), effects of large one-off societal events (e.g. COVID), and potentially other factors such as growth and longer-period seasonalities. Our model predictions need to incorporate such information so we can accurately plan for marketing activities. To solve this problem, we use a time series model to predict sales numbers for the future, and adjust our calibrations accordingly.

Methods

Calibration

The calibration process we use is very similar to sklearn’s calibration implementation. Let’s again take the Intent Model as an example. We first obtain some past raw model score output for Wayfair’s customers to form a rank-ordered list of how likely customers are to order from Wayfair. We then break up this rank-ordered list of customers into small groups (or bins) each containing the same number of customers (say, 1000 customers in each bin). Customers in each bin can be considered similar for the sake of understanding how likely they are to order. For customers in each of the bins, we can look at how many actually ordered within some time period after they were scored, and calculate the actual purchase (or conversion) probabilities.
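As a rough illustration of the binning step described above (a minimal sketch, not the production implementation), the following Python snippet sorts customers by raw score, splits them into bins of roughly equal size, and computes the observed conversion rate per bin. The function name `binned_conversion` and its arguments are hypothetical and chosen only for this example.

```python
import numpy as np

def binned_conversion(scores, converted, bin_size):
    """Return (mean raw score, observed conversion rate) for equal-size bins.

    scores    : raw model scores, one per customer
    converted : 1 if the customer ordered within the evaluation window, else 0
    bin_size  : approximate number of customers per bin (e.g. 1000)
    """
    scores = np.asarray(scores, dtype=float)
    converted = np.asarray(converted, dtype=float)

    # Rank-order customers by raw score.
    order = np.argsort(scores)

    # Split the ranked list into bins holding (nearly) the same number of customers.
    n_bins = max(1, len(scores) // bin_size)
    score_bins = np.array_split(scores[order], n_bins)
    label_bins = np.array_split(converted[order], n_bins)

    mean_score = np.array([b.mean() for b in score_bins])
    conv_rate = np.array([b.mean() for b in label_bins])
    return mean_score, conv_rate

# Toy data: six customers, three per bin.
mean_score, conv_rate = binned_conversion(
    scores=[0.5, 0.1, 0.6, 0.2, 0.4, 0.3],
    converted=[1, 0, 1, 0, 1, 0],
    bin_size=3,
)
```

Each `(mean_score, conv_rate)` pair is one point of binned calibration data: the average raw score in a bin and the fraction of customers in that bin who actually ordered.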

At this point, we would have the data needed to form a calibration curve. The figure below shows a typical calibration curve when we plot the raw scores on the x-axis against the average conversion per bin on the y-axis. As you can see, while we generally expect a higher conversion probability associated with higher ranked scores, the binned calibration data do not always form a smooth curve, indicating that this isn’t always the case. This is because of the small sample of conversions at the lower range of raw scores and the fact that no model is perfect! In order to remove the jaggedness, we fit a heuristic function (such as an exponential function) which forces a monotonic relationship between the raw score and our now calibrated conversion probability (i.e. monotonic in that a higher raw score is always associated with a higher calibrated probability, so that the rank order of customers is preserved). This fitted curve can then be used to convert raw model output scores to actual purchase probabilities.
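To make the curve-fitting step concrete, here is a minimal sketch that fits an exponential of the form p = a · exp(b · score) to binned calibration data. It assumes a simple log-linear least-squares fit with NumPy, which is a simplification chosen for this example and not necessarily the fitting procedure used in TIC. The function name `fit_exponential_calibration` is hypothetical.

```python
import numpy as np

def fit_exponential_calibration(mean_score, conv_rate):
    """Fit p = a * exp(b * score) to binned calibration data.

    Assumption for this sketch: the fit is done by linear regression on
    log(conversion rate), so bins with zero conversions are dropped.
    """
    x = np.asarray(mean_score, dtype=float)
    y = np.asarray(conv_rate, dtype=float)
    keep = y > 0  # log is undefined for empty bins

    # polyfit returns [slope, intercept] for a degree-1 fit.
    slope, intercept = np.polyfit(x[keep], np.log(y[keep]), 1)
    a, b = float(np.exp(intercept)), float(slope)

    def calibrate(raw_score):
        # The curve is increasing in the raw score whenever b > 0,
        # which preserves the rank order of customers.
        p = a * np.exp(b * np.asarray(raw_score, dtype=float))
        return np.clip(p, 0.0, 1.0)

    return a, b, calibrate

# Synthetic binned data that follows an exact exponential.
x = np.linspace(0.05, 0.95, 10)
y = 0.01 * np.exp(3.0 * x)
a, b, calibrate = fit_exponential_calibration(x, y)
calibrated = calibrate([0.2, 0.4, 0.8])
```

With real, jagged bin data the fitted curve smooths over the noise; it is worth checking that the fitted `b` is positive before using the curve, since only then is the mapping monotonically increasing.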

Forecasting

The forecasting process uses a time series model based on Prophet to predict the aggregate value of the model target. For the Intent Model, this target would be whether a customer purchases, and the aggregate value is the total number of orders at Wayfair. Briefly, Prophet is an additive model that fits a time series by considering trends, seasonalities (weekly, yearly), and shocks (holidays).
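For reference, the additive decomposition that Prophet fits can be written as follows, where the symbols follow the notation commonly used to describe Prophet rather than anything specific to TIC:

$$
y(t) = g(t) + s(t) + h(t) + \varepsilon_t
$$

Here $y(t)$ is the observed value (e.g. total daily orders), $g(t)$ is the trend, $s(t)$ is the sum of the periodic seasonal components (weekly, yearly), $h(t)$ captures the effect of holidays and other shocks, and $\varepsilon_t$ is the remaining error term.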

The default time series model we use assumes a linear growth trend of the target variable, in accordance with Wayfair’s historical growth trend. As we mentioned above, it considers factors that are important for sales at Wayfair, including yearly and weekly seasonalities as well as holidays as shock variables. In addition, the model includes regressors for the month of year, day of year, and the COVID period, so we can capture other one-off and repeated events that might affect sales. Other model hyperparameters are determined during training by minimizing the mean absolute percentage error (MAPE) of the forecast.
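A configuration along these lines could be sketched with the open-source `prophet` package as shown below. This is only an illustration under stated assumptions: the helper names (`add_regressors`, `forecast_next_day`), the regressor column names, and the COVID window arguments are hypothetical, and the actual TIC settings and hyperparameter search are not shown in this post.

```python
import pandas as pd

# Hypothetical column names for the extra regressors described above.
REGRESSORS = ["month_of_year", "day_of_year", "covid_period"]

def add_regressors(df, covid_start, covid_end):
    """Add regressor columns to a frame that has a datetime column 'ds'.

    covid_start / covid_end are placeholders supplied by the caller;
    the real window used in production is not stated in this post.
    """
    out = df.copy()
    out["month_of_year"] = out["ds"].dt.month
    out["day_of_year"] = out["ds"].dt.dayofyear
    in_window = out["ds"].between(pd.Timestamp(covid_start), pd.Timestamp(covid_end))
    out["covid_period"] = in_window.astype(int)
    return out

def forecast_next_day(history, holidays, covid_start, covid_end):
    """Fit Prophet on daily history (columns 'ds', 'y') and forecast one day ahead.

    holidays is a DataFrame with columns 'holiday' and 'ds', the format
    Prophet expects for user-supplied holiday (shock) dates.
    """
    from prophet import Prophet  # imported lazily; requires the prophet package

    model = Prophet(
        growth="linear",
        weekly_seasonality=True,
        yearly_seasonality=True,
        holidays=holidays,
    )
    for name in REGRESSORS:
        model.add_regressor(name)

    model.fit(add_regressors(history, covid_start, covid_end))

    # The future frame must carry the same regressor columns as the training frame.
    future = model.make_future_dataframe(periods=1)
    future = add_regressors(future, covid_start, covid_end)
    forecast = model.predict(future)
    return float(forecast["yhat"].iloc[-1])
```

Keeping the regressor construction in its own helper means the same transformation is applied to both the training history and the future dates, which Prophet requires when extra regressors are used.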

So far, we have tested the forecast on both time series with characteristics similar to Wayfair’s overall sales trends and time series with strongly seasonal sales (e.g. Christmas trees). We evaluated the effectiveness of these forecasts by comparing their accuracy to that of a naive model in which tomorrow’s forecast is simply today’s value. Our time series model outperformed the naive model on common metrics, including both the mean squared error and the mean absolute percentage error. This gives us confidence that we can rely on the results of the time series models to improve the accuracy of our calibration.
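The comparison against the naive baseline can be illustrated with a short sketch. The snippet below computes the mean squared error and the mean absolute percentage error (MAPE) for a naive forecast in which each day's prediction is the previous day's value; the function names and the toy numbers are made up for this example.

```python
import numpy as np

def mse(actual, predicted):
    """Mean squared error."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean((actual - predicted) ** 2))

def mape(actual, predicted):
    """Mean absolute percentage error, in percent. Assumes no zero actuals."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    return float(np.mean(np.abs((actual - predicted) / actual)) * 100.0)

def naive_forecast(series):
    """Prediction for day t is the observed value on day t-1."""
    series = np.asarray(series, dtype=float)
    return series[:-1]

# Toy daily sales series (illustrative numbers only).
sales = np.array([100.0, 110.0, 120.0, 130.0])
actual = sales[1:]                 # days that have a previous-day value
baseline = naive_forecast(sales)   # naive predictions for those days

baseline_mse = mse(actual, baseline)
baseline_mape = mape(actual, baseline)
```

A candidate forecast would be scored with the same two functions on the same days, and it beats the baseline if both of its error values are lower.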

Bringing it together

Now that we have both the calibration data and the time series model forecast, we can combine them to produce an adjusted calibration curve that incorporates expected future trends. In the case of the Intent Model, we produce model scores daily at the beginning of the day and use these scores to make our marketing decisions later in the same day. As such, we also calibrate the probabilities with the most recent sales number (i.e. from yesterday) and predict the sales number for today using the time series model.

An illustration of this process is shown below. We take two time series:

- The sales history + our forecast of the sales for today

- The history of the calibrated probabilities for a single raw probability bin (e.g. all of the historical values of actual probabilities from the bin corresponding to a raw model score of 0.4, as shown in the plot above).

We expect these two time series to follow the same trend. Therefore, we can normalize these two time series to the same scale (e.g. both with a min of 0 and a max of 1), and translate the forecasted change in sales to the change in the calibration bin. If we do this for each of the raw probability bins, and fit to the adjusted calibration data, we can obtain a new calibration curve. This adjusted calibration curve now contains information on the expected changes in sales, and we can use it to produce the final time-based calibrated model output.
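One possible reading of this adjustment, for a single bin, is sketched below. It assumes min-max normalization of both series and a direct transfer of the normalized day-over-day change in sales to the bin's probability; the exact normalization and mapping used in TIC may differ, and the function name `adjust_bin_probability` is hypothetical.

```python
import numpy as np

def adjust_bin_probability(sales_history, sales_forecast, bin_prob_history):
    """Shift a bin's latest calibrated probability by the forecasted sales change.

    sales_history    : past daily sales, most recent value last
    sales_forecast   : forecasted sales for the next day
    bin_prob_history : past calibrated probabilities for one raw-score bin,
                       aligned with sales_history
    """
    sales = np.asarray(sales_history, dtype=float)
    probs = np.asarray(bin_prob_history, dtype=float)

    sales_range = sales.max() - sales.min()
    prob_range = probs.max() - probs.min()
    if sales_range == 0 or prob_range == 0:
        # A flat series carries no scale information; keep the latest value.
        return float(probs[-1])

    # Forecasted change in sales, expressed on the normalized 0-to-1 scale.
    normalized_change = (sales_forecast - sales[-1]) / sales_range

    # Translate that change back onto the probability scale of this bin.
    adjusted = probs[-1] + normalized_change * prob_range
    return float(np.clip(adjusted, 0.0, 1.0))

# Toy example: sales are forecast to rise from 150 to 175.
adjusted = adjust_bin_probability(
    sales_history=[100.0, 200.0, 150.0],
    sales_forecast=175.0,
    bin_prob_history=[0.02, 0.04, 0.03],
)
```

Repeating this for every raw probability bin yields a set of adjusted calibration points, to which a new monotonic curve can then be fitted in the same way as the original calibration curve.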

Implementation

The implementation of TIC relies on the methodologies we mentioned above, and it needs to support a wide range of models and use cases. For example, the FN model family contains more than 300 individual models. As such, we designed TIC so it has a few modular components as shown in the image below. TIC also satisfies a few guiding principles:

- Compatibility: TIC is compatible with a wide range of models that produce scores for different prediction targets. It is also able to provide robust time series models with default parameters that need little user tuning.

- Independence: TIC is independent of the model training pipeline so that we can train models using the best known features and methodologies without considering TIC requirements and still benefit from TIC.

- Flexibility: If users want to use only one of TIC’s functionalities (calibration or forecast) without the final adjustment, they can easily access any intermediate TIC output and bypass steps that are not needed for their application.

Conclusions

TIC extends the capabilities of the current machine learning models at Wayfair so they can produce probabilistic output that incorporates information on expected changes. Through internal testing, we have already seen that model scores produced by TIC can improve the efficiency of marketing activities such as determining the best bids for ads on Google. We are also working on integrating TIC with more of our models and other ways to produce the best marketing material for our customers.