Wayfair maintains a catalog of tens of millions of unique SKUs, and we rely heavily on search and recommendation algorithms to select the right items for each customer. Traditional recommendation systems learn relevance from customer signals (pageviews, clicks, etc.) and tend to bias results toward popular products, missing intuitive, latent signals such as style compatibility. To identify stylistic compatibility efficiently and consistently across millions of products, we’ve expanded our GenAI labeling capabilities and built an LLM-powered pipeline on Google Cloud that annotates style compatibility between pairs of products from their images and metadata. The pipeline enables scalable curation across our catalog and lays the groundwork for significant improvements in search and recommendation quality. By iterating on prompt design with expert-labeled example pairs, we improved annotation accuracy by 11%. Those labels now help us evaluate, and ultimately improve, the quality of our product recommendations.

Why style compatibility labels matter

Wayfair’s catalog spans tens of millions of unique SKUs, and shoppers don’t just want a sofa—they want to curate a space that feels cohesive.

Our recent success using GenAI to automate tagging for Product Style (e.g., “Scandinavian,” “Retro”) and other attributes has shown that a well-curated catalog can significantly boost conversion rates. Wayfair manages tens of thousands of product tags, ranging from objective facts like product dimensions, to fuzzier attributes like color, to more subjective ones such as “comfortable,” which are nonetheless fundamental to a good user experience. We are now using GenAI to verify and clean these tags and to apply them consistently across the entire catalog, making it easier for customers to find what they’re looking for and improving the overall shopping experience.

Reliable style compatibility labels (pairwise judgments like “Does this dining chair look right with that farmhouse table?”) also make search results, recommendations, and on-site curation intentional rather than random. Historically, we relied heavily on human annotation, which is accurate but slow and costly. Our goal was to automate this judgment with an LLM, preserving the design sensibility our experts bring while dramatically increasing labeling speed, scalability, and consistency, and thereby enabling style-aware personalization at scale.

What we built

Our core science task is a binary classification problem: given two products, determine whether they are stylistically compatible (“Yes” or “No”). To solve it, we use a multimodal LLM, Gemini 2.5 Pro. The model processes both product imagery and descriptive text (title, class, and romance copy, i.e., the product’s marketing description), guided by structured prompts enriched with a few carefully selected examples that ground its style judgments. It outputs concise JSON containing the Yes/No label and a brief, design-aware rationale.

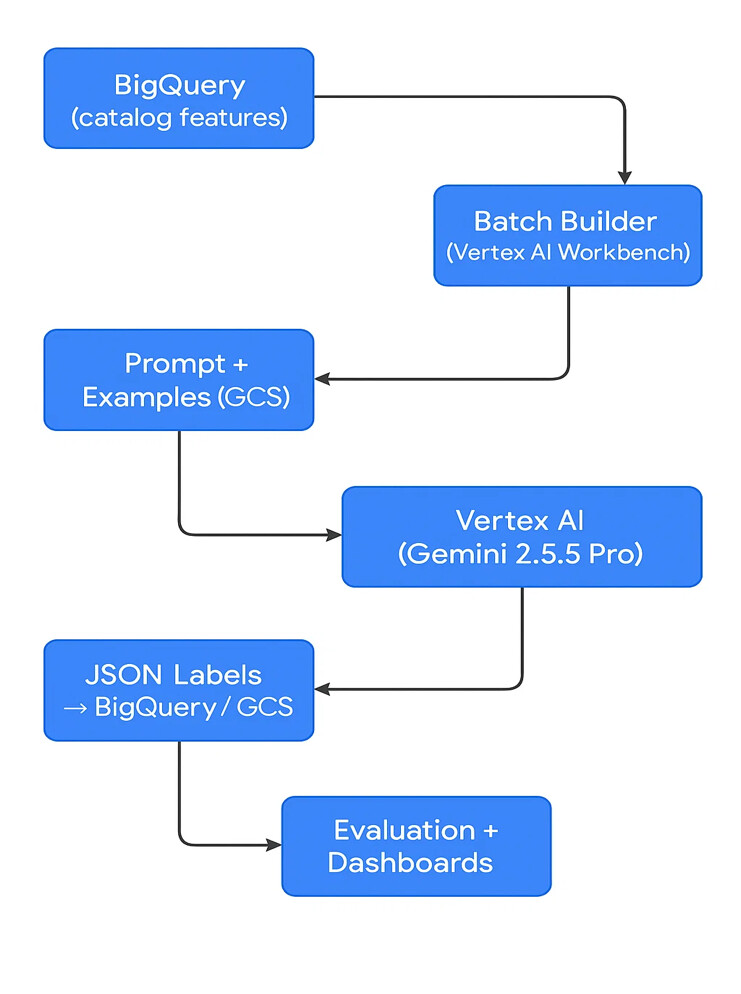
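To make the model’s inputs concrete, here is a minimal Python sketch of how one pair request might be assembled from the fields listed above. The `Product` type, the `describe` and `build_pair_request` helpers, the `{"type": ...}` part format, and the sample SKUs and image URIs are all hypothetical; a real client would map these parts onto its SDK’s own text and image types, and the post does not describe Wayfair’s actual request format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    # Hypothetical record holding the inputs the post mentions:
    # imagery plus title, class, and romance copy.
    sku: str
    title: str
    product_class: str
    romance_copy: str
    image_uri: str  # hypothetical location of the primary product image


def describe(name: str, p: Product) -> str:
    """Render one product's metadata as a compact text block."""
    return (
        f"{name} (SKU {p.sku})\n"
        f"Title: {p.title}\n"
        f"Class: {p.product_class}\n"
        f"Description: {p.romance_copy}"
    )


def build_pair_request(instructions: str, a: Product, b: Product) -> list:
    """Order the parts so each product's text sits next to its own image."""
    return [
        {"type": "text", "text": instructions},
        {"type": "text", "text": describe("Product A", a)},
        {"type": "image", "uri": a.image_uri},
        {"type": "text", "text": describe("Product B", b)},
        {"type": "image", "uri": b.image_uri},
        {"type": "text", "text": 'Reply with JSON only: {"label": "Yes" or "No", "rationale": "..."}'},
    ]


# Hypothetical sample products.
chair = Product("CH-1", "Windsor Dining Chair", "Dining Chairs",
                "A spindle-back chair in weathered oak.", "gs://example-bucket/ch-1.jpg")
table = Product("TB-1", "Farmhouse Dining Table", "Dining Tables",
                "A trestle table in reclaimed pine.", "gs://example-bucket/tb-1.jpg")
parts = build_pair_request("Judge whether these two products are stylistically compatible.", chair, table)
```

Keeping each product’s text adjacent to its image is one reasonable way to help the model associate metadata with the right picture; the post does not say how the parts are ordered in practice.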

Around the model, we built a batch pipeline on Google Cloud to run this process at scale. The pipeline pulls product imagery and metadata, builds the structured prompts, sends them to the model, and stores the results. This enables rapid, consistent, and scalable style-compatibility labeling that can be used to evaluate and improve recommendation algorithms.
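The four pipeline stages can be sketched as a simple loop. This is a minimal, sequential illustration, assuming hypothetical injected callables (`fetch_product`, `build_request`, `call_model`, `store`) that stand in for the Google Cloud services the post doesn’t name; a production job would more likely run on a managed batch service and add retries.

```python
import json
from typing import Callable, Iterable, Tuple


def run_batch(
    pairs: Iterable[Tuple[str, str]],
    fetch_product: Callable[[str], dict],
    build_request: Callable[[dict, dict], list],
    call_model: Callable[[list], str],
    store: Callable[[dict], None],
) -> int:
    """Label each (sku_a, sku_b) pair and persist one record per pair; returns the count stored."""
    stored = 0
    for sku_a, sku_b in pairs:
        a, b = fetch_product(sku_a), fetch_product(sku_b)  # 1. pull imagery and metadata
        request = build_request(a, b)                       # 2. build the structured prompt
        raw = call_model(request)                           # 3. send it to the model
        try:
            result = json.loads(raw)
            if not isinstance(result, dict):
                raise ValueError("expected a JSON object")
        except ValueError:  # json.JSONDecodeError is a subclass of ValueError
            result = {"error": "unparseable output"}
        store({"sku_a": sku_a, "sku_b": sku_b, **result})   # 4. store the result
        stored += 1
    return stored
```

Injecting each stage as a function keeps the loop testable with fakes and lets any stage be swapped for a real service without touching the others.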

We approached the style compatibility task through prompt engineering rather than model training. Compared with higher-effort approaches like RLHF or DPO, prompt engineering let us test hypotheses and iterate quickly with domain-specific guidance, at much lower overhead. For the model, we selected Gemini 2.5 Pro after benchmarking several Gemini variants and open-source multimodal models: it delivered the highest classification accuracy and integrated cleanly with our Google Cloud environment.

Our prompt design was deliberate and structured. We embedded detailed, interior-design-specific language (shape and silhouette, material and finish harmony, color palette and undertones, proportion, and scale) so that the model’s reasoning aligned with how human experts think. We provided a small set of few-shot examples drawn from realistic catalog scenarios, including tricky edge cases. We also introduced two key rules. First, products in the same category (e.g., two dining tables) can be compatible even if functional details differ (counter vs. standard height), as long as their style matches. Second, the model should return “No” when two items clearly don’t belong in the same room (e.g., a coffee table and a bathroom vanity). These rules mirror the nuanced decisions human designers make: functional variation within a category doesn’t break stylistic harmony, while pairing items with mismatched functions across categories can disrupt an entire room’s cohesion.
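The prompt structure described above (design vocabulary, two explicit rules, and a few expert-labeled examples) might be assembled like this. It’s a minimal sketch: the wording, the `Example` type, the `build_instructions` helper, and the two sample pairs are hypothetical illustrations built from the cases mentioned in this post, not Wayfair’s production prompt.

```python
import json
from dataclasses import dataclass
from typing import List

# Design principles the model is asked to reason about.
CRITERIA = [
    "shape and silhouette",
    "material and finish harmony",
    "color palette and undertones",
    "proportion and scale",
]

# The two explicit rules described in the post, paraphrased.
RULES = [
    "Products in the same category (e.g., two dining tables) can be compatible even if "
    "functional details differ (e.g., counter vs. standard height), as long as their style matches.",
    'Answer "No" when the two items clearly do not belong in the same room '
    "(e.g., a coffee table and a bathroom vanity).",
]


@dataclass(frozen=True)
class Example:
    product_a: str
    product_b: str
    label: str  # "Yes" or "No", taken from an expert judgment
    rationale: str


def build_instructions(examples: List[Example]) -> str:
    """Combine design vocabulary, explicit rules, and few-shot examples into one instruction block."""
    lines = [
        "You are an interior designer deciding whether two products are stylistically compatible.",
        "Ground your judgment in these design principles:",
    ]
    lines += [f"- {c}" for c in CRITERIA]
    lines.append("Rules:")
    lines += [f"- {r}" for r in RULES]
    lines.append("Examples:")
    for ex in examples:
        # Serialize each answer in the same JSON shape the model must produce.
        answer = json.dumps({"label": ex.label, "rationale": ex.rationale})
        lines.append(f"Product A: {ex.product_a}\nProduct B: {ex.product_b}\nAnswer: {answer}")
    return "\n".join(lines)


# Hypothetical few-shot examples.
EXAMPLES = [
    Example("Reclaimed-wood farmhouse dining table, counter height",
            "Reclaimed-wood farmhouse dining table, standard height",
            "Yes", "Same category, wood, finish, and silhouette; only the height differs."),
    Example("Mid-century walnut coffee table", "White marble bathroom vanity",
            "No", "These items do not belong in the same room."),
]
prompt = build_instructions(EXAMPLES)
```

Because each example’s answer uses the exact JSON shape requested from the model, the examples double as a demonstration of the output format.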

How we measured progress

We evaluated the system against a held-out set of expert judgments, treating the human labels as ground truth and comparing the model’s Yes/No outputs with those of individual experts. Although human annotators generally agree, subjective style judgments naturally vary, so we expect less than 100% agreement even between experts. Moving from our initial generic prompt to the final, design-aware instruction set with curated few-shot examples yielded a substantial absolute gain in agreement rate. In future evaluations, we will apply the same approach to compare recommendation algorithms: if one algorithm’s suggested items receive a higher proportion of “Yes” labels than another’s, we will consider it superior. This gives us a clear, consistent metric while acknowledging that style compatibility is not a perfectly objective task.
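Both measurements in this section reduce to simple proportions. The sketch below assumes labels keyed by a hypothetical (SKU, SKU) pair identifier and computes the model’s agreement with an expert, plus the Yes-rate used to compare recommendation algorithms; `agreement_rate`, `yes_rate`, and the toy data are invented for illustration.

```python
def agreement_rate(predicted: dict, reference: dict) -> float:
    """Fraction of shared pairs on which two labelers give the same Yes/No answer."""
    shared = predicted.keys() & reference.keys()
    if not shared:
        raise ValueError("no overlapping pairs to compare")
    return sum(predicted[k] == reference[k] for k in shared) / len(shared)


def yes_rate(labels: list) -> float:
    """Share of an algorithm's recommended pairs labeled as compatible."""
    if not labels:
        raise ValueError("no labels to score")
    return sum(label == "Yes" for label in labels) / len(labels)


# Toy held-out set: pair id -> label.
expert_1 = {("CH-1", "TB-1"): "Yes", ("CH-2", "TB-1"): "No", ("CH-3", "TB-2"): "Yes", ("CH-4", "TB-2"): "No"}
expert_2 = {("CH-1", "TB-1"): "Yes", ("CH-2", "TB-1"): "Yes", ("CH-3", "TB-2"): "Yes", ("CH-4", "TB-2"): "No"}
model = {("CH-1", "TB-1"): "Yes", ("CH-2", "TB-1"): "No", ("CH-3", "TB-2"): "No", ("CH-4", "TB-2"): "No"}

print(agreement_rate(model, expert_1))     # 0.75
print(agreement_rate(expert_2, expert_1))  # 0.75, so the experts disagree too

# Labels for the pairs each (hypothetical) algorithm recommended.
algo_a = ["Yes", "Yes", "No", "Yes"]
algo_b = ["Yes", "No", "No", "No"]
print(yes_rate(algo_a) > yes_rate(algo_b))  # True
```

Computing expert-versus-expert agreement the same way gives a rough ceiling for what to expect from the model. When comparing algorithms, a difference in Yes-rate is only meaningful with enough labeled pairs per algorithm.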

What actually moved the needle

Three things made the largest difference. First, we anchored the model’s reasoning in specific interior design principles (shape, material, color harmony, and scale), which steered it away from vague, generic descriptions and toward concrete visual justifications grounded in the products’ actual features. Second, a handful of crisp, realistic examples outperformed long rule lists; examples are how the model learns our taste. Third, a strict output contract (JSON only, no extra prose) kept the pipeline resilient and its output easy to consume downstream.
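A strict output contract is easiest to keep when it is enforced at the boundary. Here is a minimal validator, assuming the contract is exactly one JSON object with a `label` of "Yes" or "No" and a non-empty `rationale`; these field names and the `parse_label` helper are our assumptions, since the post specifies only JSON carrying a label and a brief rationale.

```python
import json

ALLOWED_LABELS = {"Yes", "No"}
REQUIRED_KEYS = {"label", "rationale"}


def parse_label(raw: str) -> dict:
    """Accept only a bare JSON object with a Yes/No label and a non-empty rationale.

    Raises ValueError for anything else (surrounding prose, markdown fences,
    missing or extra keys), so malformed responses never reach downstream consumers.
    """
    obj = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on non-JSON text
    if not isinstance(obj, dict) or set(obj) != REQUIRED_KEYS:
        raise ValueError(f"expected exactly the keys {sorted(REQUIRED_KEYS)}")
    label = obj["label"]
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        raise ValueError(f"label must be one of {sorted(ALLOWED_LABELS)}, got {label!r}")
    rationale = obj["rationale"]
    if not isinstance(rationale, str) or not rationale.strip():
        raise ValueError("rationale must be a non-empty string")
    return obj
```

Rejected responses can then be retried or queued for review instead of being silently coerced. Gemini also offers structured-output settings that constrain responses to JSON at generation time; the post doesn’t say whether the pipeline uses them, and a client-side check like this remains a cheap safeguard either way.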

Impact and what’s next

The immediate impact is the ability to generate style compatibility labels far faster than manual annotation, with measurable agreement with expert judgment. These labels will be used for offline evaluation of recommendation algorithms, an important step toward improving relevance and driving future gains in customer engagement and conversion. We aren’t yet using the system in production recommendations, but it is designed to scale, and the next step would be to integrate it into live search and personalization flows.

On the technical side, we haven’t yet run Gemini 2.5 Pro against our full catalog, but our approach is built for that expansion. Looking ahead, we plan to add active learning so that uncertain cases are routed to human reviewers for continuous improvement; to monitor seasonal and category-specific shifts in style trends (e.g., color palettes and materials that go in and out of fashion); and to expand beyond judging pairs of products to assessing whether entire groups of products, like a full room set, work together cohesively.
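One way the planned active-learning step could decide what counts as an uncertain case is self-consistency: ask the model the same question several times and send pairs with split votes to a human. This is a hypothetical sketch; the post doesn’t say how uncertainty will be measured, and `route_pair` and its 0.8 threshold are illustrative assumptions.

```python
from collections import Counter
from typing import List, Optional, Tuple


def route_pair(votes: List[str], min_agreement: float = 0.8) -> Tuple[str, Optional[str]]:
    """Decide whether a pair's label can be accepted automatically.

    votes: Yes/No labels from several independent model calls on the same pair
    (hypothetical: assumes sampling settings under which answers can vary).
    Returns ("auto", majority_label) when enough votes agree, else ("human_review", None).
    """
    if not votes:
        raise ValueError("need at least one vote")
    label, count = Counter(votes).most_common(1)[0]
    if count / len(votes) >= min_agreement:
        return "auto", label
    return "human_review", None


print(route_pair(["Yes", "Yes", "Yes", "Yes", "Yes"]))  # ('auto', 'Yes')
print(route_pair(["Yes", "No", "Yes", "No", "Yes"]))    # ('human_review', None)
```

Human labels gathered this way could then be added to the evaluation set or considered as candidate few-shot examples, closing the continuous-improvement loop.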