Testing is a critical part of software development, and unit testing is usually where it starts. Unit tests are quick and easy to set up, and they build a safeguard that keeps future code changes in line with the corner cases. They are also much easier to maintain. Their biggest advantage is that they force developers to write modular, testable code.

Given all these benefits, unit testing is almost always a developer's first preference. But when it comes to testing code that interacts with external systems such as databases, messaging systems, and cache layers, unit testing is not nearly as helpful. We could mock those parts and continue to rely on unit tests, but the mocks may hide parts of the code flow that then go unvalidated. This is where integration tests really help: they test the complete end-to-end functionality.
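To make the point about mocking concrete, here is a minimal sketch in Python. It is not taken from the application discussed in this article: the `register_user` function and the `users` table are hypothetical, and an in-memory SQLite database stands in for a real external database. The same check is run once against a mocked connection and once against a real one.

```python
import sqlite3
from unittest.mock import MagicMock


def register_user(conn, email):
    """Insert a user row; the database is expected to enforce unique emails."""
    conn.execute("INSERT INTO users (email) VALUES (?)", (email,))


def duplicate_is_rejected(conn):
    """Register the same email twice and report whether the second insert failed."""
    register_user(conn, "a@example.com")
    try:
        register_user(conn, "a@example.com")
    except sqlite3.IntegrityError:
        return True
    return False


# Unit-test style: the connection is mocked, so the UNIQUE constraint never runs.
mocked_conn = MagicMock()
mock_result = duplicate_is_rejected(mocked_conn)

# Integration style: a real (in-memory) database executes the SQL and its constraints.
real_conn = sqlite3.connect(":memory:")
real_conn.execute("CREATE TABLE users (email TEXT UNIQUE)")
real_result = duplicate_is_rejected(real_conn)

print(mock_result, real_result)  # prints "False True"
```

With the mock, the duplicate insert appears to succeed, so a test built on it would never exercise the constraint. Only the run against a real database reveals how the code behaves when the constraint fires.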

What is Integration Testing?

Integration testing means testing the functionality of a software system as a combined entity, together with the modules it depends on. For a web application, which is the main focus of this article, that includes testing the APIs across the web layer and the database layer, along with any other external systems the application interacts with to serve a user request.

How to Make It Work on Build Tools Such as Buildkite?

Most well-known frameworks, such as Spring Boot and Flask, provide easy ways to start the web context and test it with various inputs against a local setup of the other modules. Running these tests in a build environment is trickier, even more so once multiple developers create their own branches and build different parts of the application and its integration tests, each of which we want to run in its own isolated environment.

Here we walk through an example. We set up integration tests for an application whose repository is hosted on GitHub and integrated with Buildkite. The application interacts with PostgreSQL running on Cloud SQL in Google Cloud Platform (GCP), and the application itself is deployed in a Kubernetes cluster.

The sequence of actions we wanted our build job to take was:

- Check out the corresponding branch from the git repository

- Initialize the database (create it if it does not exist)

- Run database migrations to bring the state of that database to the latest known state

- Load sample data to be used by the integration tests

- Invoke integration tests using the above database credentials

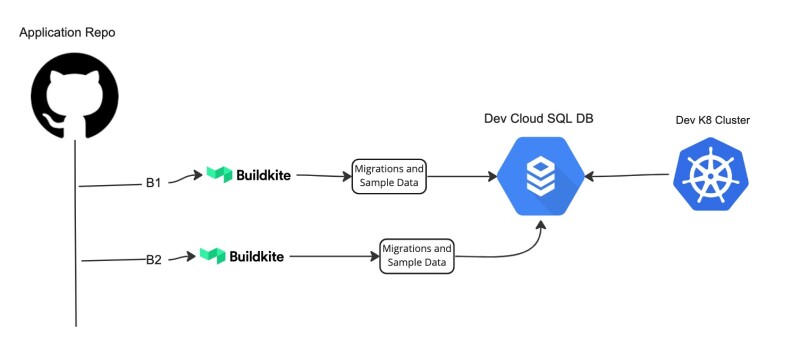
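The database-related steps listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: an in-memory SQLite database stands in for PostgreSQL, the `MIGRATIONS` list, the `schema_version` table, and the `customers` table are hypothetical, and a real project would most likely use a dedicated migration tool instead of hand-rolled version tracking.

```python
import sqlite3

# Hypothetical, ordered migrations; a real project would use a migration tool.
MIGRATIONS = [
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)",
    "ALTER TABLE customers ADD COLUMN email TEXT",
]

# Hypothetical sample data for the integration tests.
SAMPLE_ROWS = [("Ada", "ada@example.com"), ("Linus", "linus@example.com")]


def initialize_database(path=":memory:"):
    """Create the database if it does not exist, plus a table tracking applied versions."""
    conn = sqlite3.connect(path)
    conn.execute("CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)")
    return conn


def current_version(conn):
    """Return the highest applied migration number, or 0 for a fresh database."""
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    return row[0] or 0


def migrate(conn):
    """Apply only the migrations that have not been applied yet."""
    for version, statement in enumerate(MIGRATIONS, start=1):
        if version > current_version(conn):
            conn.execute(statement)
            conn.execute("INSERT INTO schema_version (version) VALUES (?)", (version,))
    conn.commit()
    return current_version(conn)


def load_sample_data(conn):
    """Insert the rows that the integration tests expect to find."""
    conn.executemany("INSERT INTO customers (name, email) VALUES (?, ?)", SAMPLE_ROWS)
    conn.commit()


def count_customers(conn):
    """Stand-in for an integration test query against the prepared database."""
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


conn = initialize_database()
version = migrate(conn)
load_sample_data(conn)
print(version, count_customers(conn))  # prints "2 2"
```

Because `migrate` skips versions that are already recorded, calling it again on the same database is a no-op, which is the property that lets a build job run it on every build.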

Option 1:

The first option we explored was to have the integration tests connect to and run against the Dev environment's Cloud SQL instance itself, as shown in the picture below.

The advantage of this approach was that no explicit database setup was needed, since the dev database was always available. It was simple and easy to implement, and it works if your database changes are neither concurrent nor frequent. But once different branches needed to run their own database migrations, the approach broke down, because a shared database can hold only one version of the schema at a time. In that situation, we wouldn't recommend it.

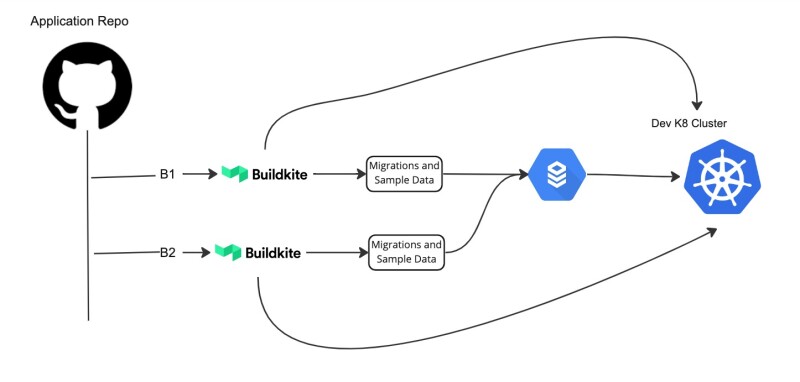

Option 2:

The second approach was similar to the first, with one difference. Instead of initializing the Spring Boot service within the test case, we used the Dev environment itself to run the tests. The sequence of actions looked like this:

- Check out the code, then compile, package, and deploy the build to the Dev environment, all from within the Buildkite job

- Run the integration tests against the running Dev instance

The advantage of this approach was that we truly simulated end-user requests with real payloads, including the HTTP layer, and nothing was mocked. The drawback was the same as with the first option: you could only keep one version of the database state. Another important point was that the tests ran against the Dev environment, so neither Buildkite nor SonarQube was aware of any code coverage metrics.

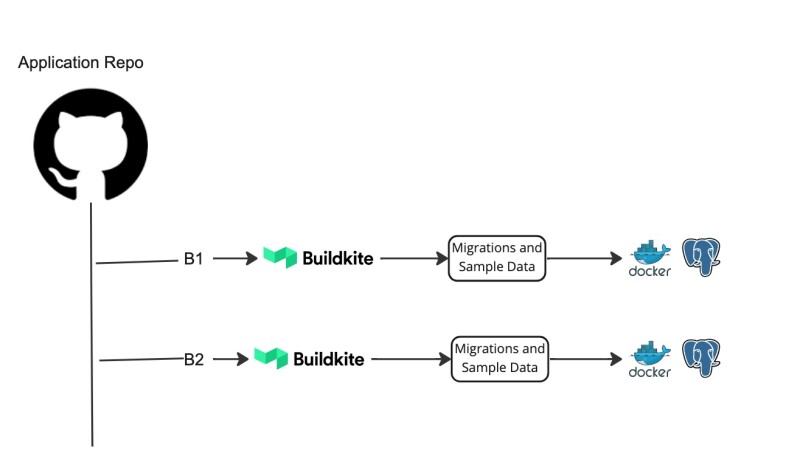

Option 3:

This is what we ended up with. In this approach, each branch build had its own isolated database instance running in a Docker container alongside the application's Docker container, all within the build steps. Once these two containers were up and running, we ran our database migrations, loaded the sample data, and then initiated the integration tests.

The branch-specific isolated database instances gave each branch a lot of flexibility to test and validate its own use cases. With a few tweaks to the docker-compose dependencies, we were able to achieve this execution flow. Check out this docker-compose file to better understand how we did it. This is the approach we recommend.
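One ingredient such an execution flow typically needs is a way to hold back the migrations and tests until the database container actually accepts connections. The Python sketch below shows one way to do that and is not the mechanism from our docker-compose file. The function names are hypothetical, and a successful TCP connection is only an approximation of "the database is ready to serve queries".

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # Opening and immediately closing a connection is enough for this check.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: wait a little and try again.
            time.sleep(interval)
    return False


def run_after_database(host, port, steps, timeout=30.0):
    """Run each step in order, but only after the database port is reachable."""
    if not wait_for_port(host, port, timeout=timeout):
        raise TimeoutError(f"database at {host}:{port} did not become reachable")
    return [step() for step in steps]
```

In a build step, a call might look like `run_after_database("db", 5432, [run_migrations, load_sample_data, run_tests])`, where `db` is an assumed service name, 5432 is PostgreSQL's default port, and the three callables are placeholders for the project's own commands.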

Maintaining an isolated database container does add overhead: migrations have to run to keep the database state in sync, and sample data has to be loaded. Even so, we felt it added a lot of value by validating the application logic, building a strong safeguard around that logic, and helping us meet the code coverage thresholds we set. With a proven solution in place for maintaining database migrations and preparing sample data, we highly recommend adding this integration step to your pipeline.