When people think of who Wayfair’s customers are, the shoppers using our website immediately come to mind. But the suppliers (aka manufacturers) who list their products on our website are also our customers. Just as Wayfair has customer service associates to assist our shoppers, we have supplier associates who assist our suppliers via SupportHub, our supplier-facing ticketing system based on JIRA.

Much of the work done by our supplier associates requires skill and knowledge, but some of it is boring rote work. For example, our supplier associates manually triage tickets by reviewing unstructured ticket information, looking up information in a database, and entering the corresponding structured data on the ticket. Specifically, they set the supplier ID, the primary question type, and the supplier’s preferred language. We built an LLM-based ticket automation system, an extension to our Wilma product suite, to automate this work so we can reduce response time, lower costs, and raise the accuracy of this triage process.

How Does Wilma Work?

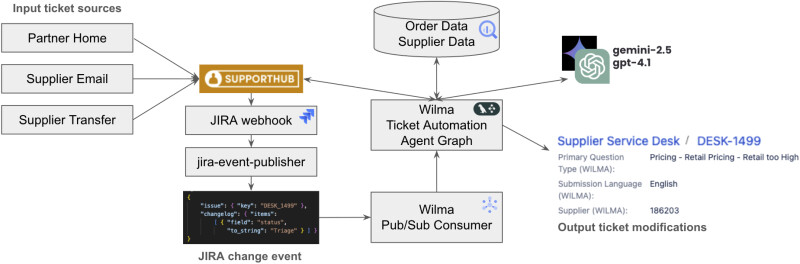

The core of Wilma is a series of LLM and tool calls orchestrated using LangGraph and triggered by events. When a supplier emails Wayfair, a new ticket is created in SupportHub, and a webhook publishes an event to a Pub/Sub topic. Our Pub/Sub consumer listens for these events and, when it sees one corresponding to the creation of a new ticket, invokes the LangGraph graph. This graph orchestrates calling LLMs, querying tabular data in BigQuery, and interacting with SupportHub.
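
The event-filtering step can be sketched roughly as follows. This is a minimal illustration, not Wilma's actual consumer: the payload fields (`event_type`, `ticket_id`, `description`), the `ticket_created` value, and the `handle_message` function are all assumptions, and the graph is represented by any callable so the example does not depend on the Pub/Sub or LangGraph client libraries.

```python
import json
from typing import Any, Callable

# Hypothetical event type name; the real webhook payload schema is not described in this post.
TICKET_CREATED = "ticket_created"


def handle_message(raw: bytes, invoke_graph: Callable[[dict[str, Any]], Any]) -> bool:
    """Decode one message and run the graph for newly created tickets only.

    Returns True if the graph was invoked, False if the event was skipped.
    """
    event = json.loads(raw.decode("utf-8"))
    if event.get("event_type") != TICKET_CREATED:
        return False  # ignore updates, comments, and other event kinds
    invoke_graph({
        "ticket_id": event["ticket_id"],
        "description": event.get("description", ""),
    })
    return True


# Usage: a list's append method stands in for the graph invocation.
invoked: list[dict[str, Any]] = []
created = json.dumps(
    {"event_type": "ticket_created", "ticket_id": "SH-1", "description": "Hi"}
).encode("utf-8")
updated = json.dumps({"event_type": "ticket_updated", "ticket_id": "SH-1"}).encode("utf-8")
handle_message(created, invoked.append)
handle_message(updated, invoked.append)
```

Only the first message reaches the graph; the update event is dropped before any LLM call is made.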

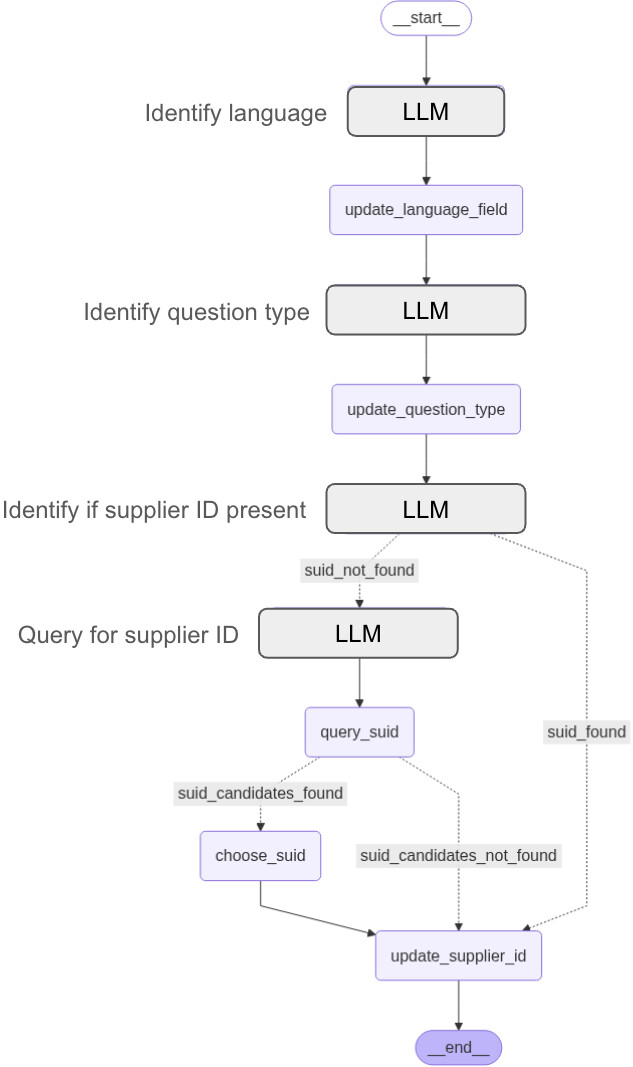

When invoked, the graph takes the following steps:

1. Use an LLM to identify the question type (i.e., intent classification)

2. Use an LLM to identify the supplier’s preferred language

3. Use an LLM agent with access to BigQuery to determine the supplier ID

4. Use the JIRA API to update the ticket information
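
As a rough sketch, the four steps can be read as functions applied in a fixed order to a shared state object. Everything below is illustrative: `TicketState`, the step functions, and the supplier ID `4242` are made up, the LLM and JIRA calls are replaced by trivial stubs, and plain Python is used in place of LangGraph.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class TicketState:
    """Fields the triage steps fill in; the names are illustrative."""
    description: str
    question_type: Optional[str] = None
    language: Optional[str] = None
    supplier_id: Optional[int] = None
    ticket_updated: bool = False


def classify_question_type(state: TicketState) -> TicketState:
    # Stand-in for the LLM intent classifier (keyword match, not a real model).
    state.question_type = "invoice" if "invoice" in state.description.lower() else "other"
    return state


def detect_language(state: TicketState) -> TicketState:
    # Stand-in for the LLM language detector.
    state.language = "es" if "hola" in state.description.lower() else "en"
    return state


def lookup_supplier_id(state: TicketState) -> TicketState:
    # Stand-in for the BigQuery-backed agent; 4242 is a made-up ID.
    state.supplier_id = 4242
    return state


def update_ticket(state: TicketState) -> TicketState:
    # Stand-in for the JIRA API call; only "writes" when every field is set.
    state.ticket_updated = None not in (state.question_type, state.language, state.supplier_id)
    return state


STEPS: list[Callable[[TicketState], TicketState]] = [
    classify_question_type,
    detect_language,
    lookup_supplier_id,
    update_ticket,
]


def run_triage(description: str) -> TicketState:
    """Apply each step in order, threading the state through."""
    state = TicketState(description=description)
    for step in STEPS:
        state = step(state)
    return state


result = run_triage("Hola, I have a question about an invoice")
```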

Step #3 is by far the most complicated, because the ReAct (reasoning + acting) LLM agent is given a high-level task (“Identify the supplier ID given the information on the ticket”) and access to a BigQuery tool. A typical chain of reasoning and action steps might look like this:

- “I don’t see an explicit supplier ID mentioned in the description. I do see a potential SKU, so I will use that to look up the supplier ID.”

- run_query('SELECT SupplierID FROM supplier_skus WHERE SKU = "W008787164"')

- “That query returned no results so I need to try something different. The ticket description contains the supplier’s email address, let me try finding the supplier ID that way.”

- run_query('SELECT SupplierID FROM supplier_details WHERE SupplierEmail LIKE "contact@acme.com"')

- “Success!”
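
The observe-and-retry pattern in that chain can be sketched as below. This is a simplified stand-in under several assumptions: an in-memory SQLite database replaces BigQuery, a fixed list of strategies replaces the LLM's reasoning, the SKU and email regular expressions are guesses, and the supplier ID `4242` is invented. The table and column names are the ones from the example queries above.

```python
import re
import sqlite3
from typing import Optional


def make_demo_db() -> sqlite3.Connection:
    """Build a tiny in-memory database standing in for the BigQuery tables."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE supplier_skus (SupplierID INTEGER, SKU TEXT)")
    conn.execute("CREATE TABLE supplier_details (SupplierID INTEGER, SupplierEmail TEXT)")
    conn.execute("INSERT INTO supplier_details VALUES (4242, 'contact@acme.com')")
    conn.commit()
    return conn


def run_query(conn: sqlite3.Connection, sql: str, params: tuple) -> list:
    """The single tool the agent may call; parameters are bound, not interpolated."""
    return conn.execute(sql, params).fetchall()


def find_supplier_id(conn: sqlite3.Connection, description: str) -> tuple[Optional[int], list[str]]:
    """Try each lookup strategy in turn, recording an act/observe trace."""
    trace: list[str] = []
    sku = re.search(r"\bW\d{9}\b", description)  # assumed SKU shape
    email = re.search(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+", description)
    attempts = []
    if sku:
        attempts.append(
            ("SKU", "SELECT SupplierID FROM supplier_skus WHERE SKU = ?", sku.group()))
    if email:
        attempts.append(
            ("email", "SELECT SupplierID FROM supplier_details WHERE SupplierEmail = ?",
             email.group()))
    for label, sql, value in attempts:
        trace.append(f"act: look up by {label}")
        rows = run_query(conn, sql, (value,))
        if rows:
            trace.append("observe: success")
            return rows[0][0], trace
        trace.append("observe: no results, try something different")
    return None, trace


conn = make_demo_db()
supplier_id, trace = find_supplier_id(
    conn, "Question about W008787164, reach us at contact@acme.com")
```

In a real ReAct agent the model itself chooses the next query after reading each observation; the fixed strategy list here only mimics the shape of that loop.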

We found that the automation described above was able to achieve better-than-human performance by accurately identifying the question type 93% of the time, the language 98% of the time, and the supplier ID 88% of the time. By comparison, humans correctly identify the question type only 75% of the time (across 81 possible categories). In addition to the accuracy improvement, Wilma reduces the time it takes to process a ticket and allows our associates to focus on complex problem-solving and high-value supplier interactions instead of manual data entry.

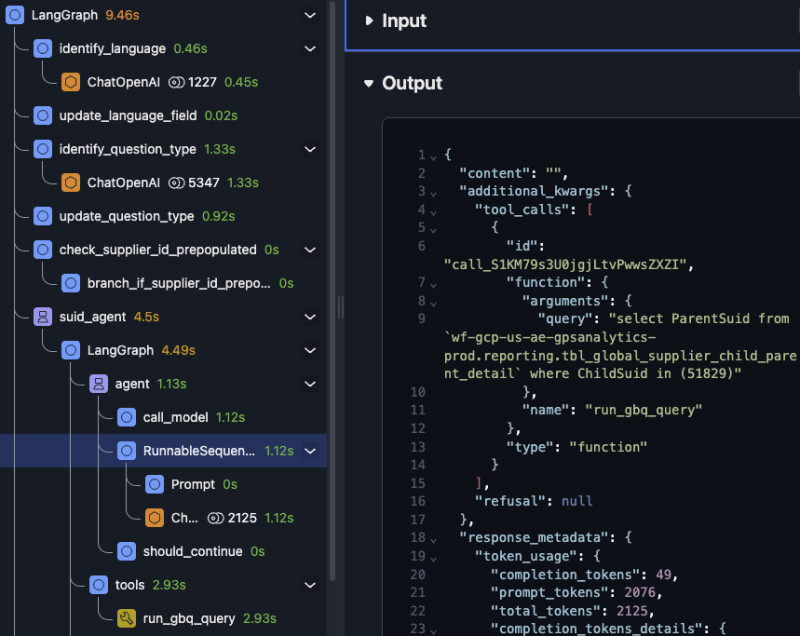

Lessons Learned - Observability

Once the execution graph becomes complicated, being able to visualize and search through steps easily is invaluable. We use Arize for our observability platform and rely on it for both pre-launch development and post-launch debugging. We found that having a purpose-built UI for navigating and searching traces is much more effective than looking at raw output logs. In addition, we use Arize to set up alerts that notify us about anomalous shifts in metrics such as token usage or latency.
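
To make the idea of an "anomalous shift" concrete, here is one simple way such a check could be expressed. It is a generic z-score rule written for illustration; it is not Arize's API or alerting logic, and the function name, threshold, and sample numbers are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # any change from a perfectly flat baseline is notable
    return abs(latest - mu) / sigma > threshold


# Made-up per-ticket token counts from recent runs.
recent_tokens = [1000.0, 1100.0, 950.0, 1050.0, 1000.0]
spike = is_anomalous(recent_tokens, 5000.0)
normal = is_anomalous(recent_tokens, 1020.0)
```

The same check applies to latency or any other per-run metric; a sudden jump in tokens per ticket often means the agent is looping on a query it cannot resolve.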

Lessons Learned - Agents vs. Workflows (and a Hybrid Approach)

The design of a complex system involving LLMs requires navigating the tradeoff between an agentic approach, where the agents have full decision-making authority, and a largely deterministic workflow-like system where the order of operations is tightly scripted.

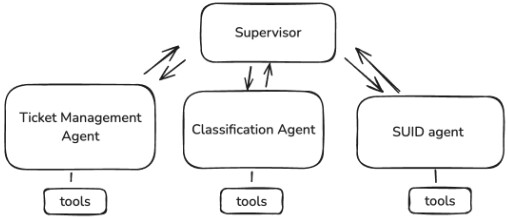

When we first began this work, we naively assumed that a fully agentic approach would work. In particular, we created a ticket management agent responsible for interacting with SupportHub, a classification agent to handle the question type and language, and a supplier ID agent to look up the supplier ID. Each agent was given the tools needed to do its job, and a supervisor agent was responsible for coordinating their interaction.

We found that this approach was simple to design and easy to extend. However, we observed failures both in communication (one agent would provide information that another would ignore) and coordination (the supervisor would unnecessarily call an agent multiple times). For example, in one case the classification agent provided the language to the supervisor, the supervisor passed that information to the ticket management agent, and that agent used its “judgment” to incorrectly decide it did not need to call the JIRA API to update the ticket.

To improve the performance of the graph, we turned to a workflow approach: instead of relying on a supervisor agent to orchestrate the operation of subordinate agents, we explicitly defined the series of LLM and function calls needed to accomplish the goal.

We found that this workflow approach was much more reliable and easier to debug, and it cost less because it resulted in fewer LLM calls. However, we observed that it was bad at handling edge cases. For example, if the supplier’s email address had a typo in it (e.g., “supplier@acme.con”), the workflow approach would fail on the first lookup, whereas the agentic approach would be smart enough to observe the error, recognize there might be a typo, and retry with a variation like “supplier@acme.com”.

What ultimately worked best was a hybrid approach: a workflow in which one node is itself an agent responsible for looking up the supplier ID. Specifically, we replaced the bottom part of the workflow with a ReAct LLM agent that has access to a BigQuery tool, allowing it to try different querying strategies and reformulate its queries based on the results it observes. This gave us the best of both worlds: deterministic guarantees about the order of operations, plus intelligent error handling thanks to the agent’s ability to interpret and recover from edge-case errors.
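
The difference between the rigid lookup and the agent node can be illustrated with the email-typo case. This is a toy sketch under stated assumptions: the `SUPPLIERS` dictionary stands in for the supplier table, `4242` is an invented ID, and the hand-picked typo repairs in `email_variants` stand in for the judgment an LLM agent would apply.

```python
from typing import Optional

# Hypothetical directory standing in for the supplier table.
SUPPLIERS = {"supplier@acme.com": 4242}


def lookup_by_email(email: str) -> Optional[int]:
    return SUPPLIERS.get(email.lower())


def email_variants(email: str) -> list[str]:
    """Original address first, then a few hand-picked typo repairs (illustrative only)."""
    variants = [email]
    for wrong, right in ((".con", ".com"), (".cmo", ".com"), (".ocm", ".com")):
        if email.endswith(wrong):
            variants.append(email[: -len(wrong)] + right)
    return variants


def workflow_lookup(email: str) -> Optional[int]:
    """Rigid workflow node: one attempt, no recovery."""
    return lookup_by_email(email)


def agent_lookup(email: str, max_attempts: int = 3) -> Optional[int]:
    """Agent-style node: observe a miss, then retry with a variation, up to a bound."""
    for candidate in email_variants(email)[:max_attempts]:
        supplier_id = lookup_by_email(candidate)
        if supplier_id is not None:
            return supplier_id
    return None


def hybrid_triage(email: str) -> dict:
    """Fixed order of operations; only the supplier lookup is delegated to the agent."""
    steps_run = ["classify_question_type", "detect_language"]  # LLM calls omitted
    steps_run.append("lookup_supplier_id")
    supplier_id = agent_lookup(email)
    steps_run.append("update_ticket")  # JIRA call omitted
    return {"supplier_id": supplier_id, "steps_run": steps_run}


rigid = workflow_lookup("supplier@acme.con")
hybrid = hybrid_triage("supplier@acme.con")
```

The order of steps never varies, so the ticket update always runs; the retry bound keeps the agent node from looping indefinitely on an address that cannot be repaired.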

Future Plans

Our experience with Wilma offered valuable insights into designing LLM-powered systems. Wayfair has many other business processes where humans are doing relatively simple, repetitive tasks, ranging from finance to logistics to customer service. The ticket triage system described in this blog post is just one instance of the kind of LLM-based automation we have put into practice. For example, we also have a system that monitors customer-supplier interactions and flags when intervention by a Wayfair associate is needed.

As models (and our expertise in using them) improve, we hope to tackle more and more complex problems, allowing our associates to focus on high-value tasks that better leverage their expertise and skill. Stay tuned for an upcoming blog post on our application of LLM agents to the complex and high-stakes world of credit disputes!

The authors would like to acknowledge and thank Abhishek Verma, Graham Ganssle, Erin McGuire, Claire Perreault, and Janet Yue for their contributions to Wilma.